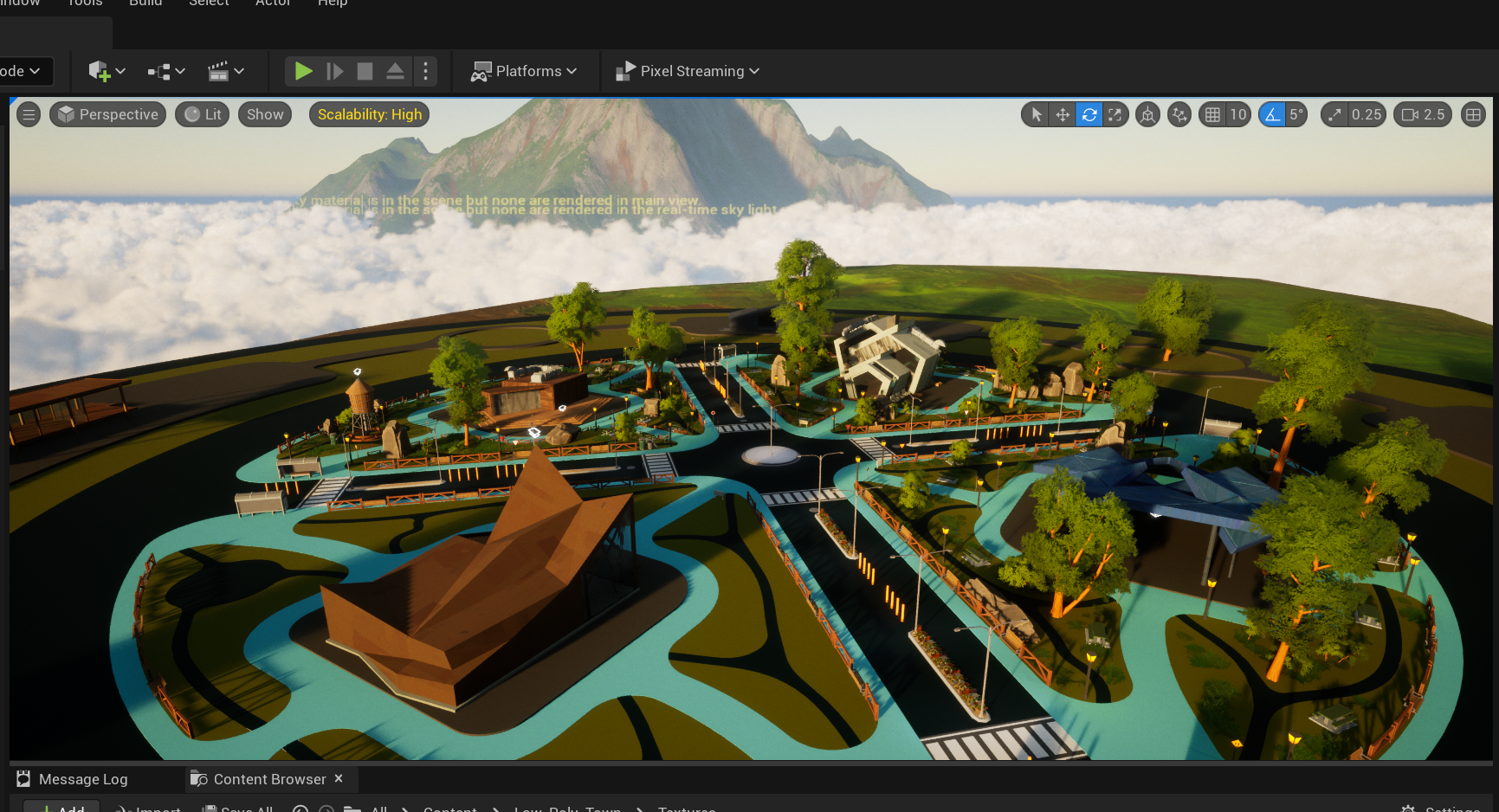

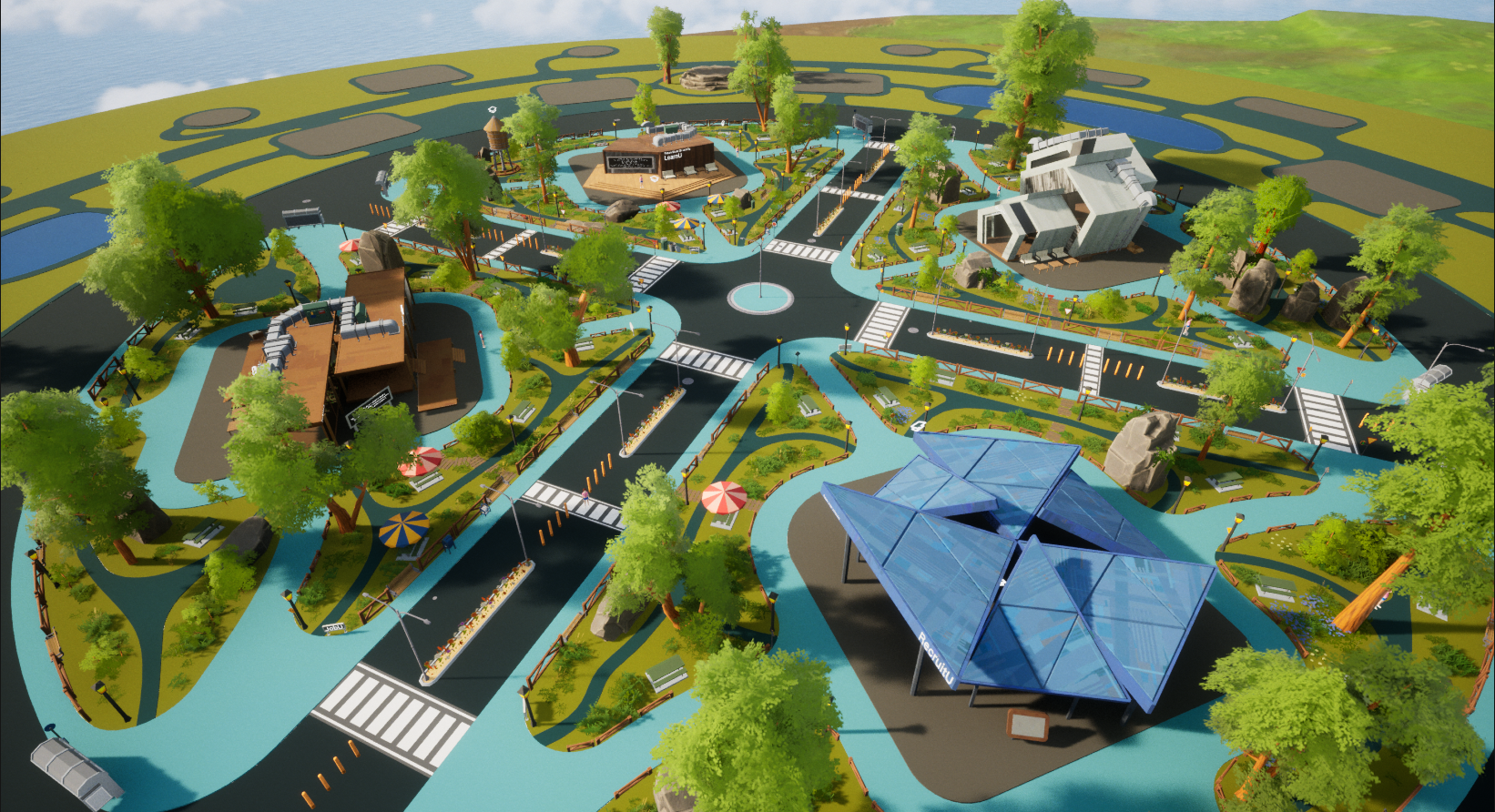

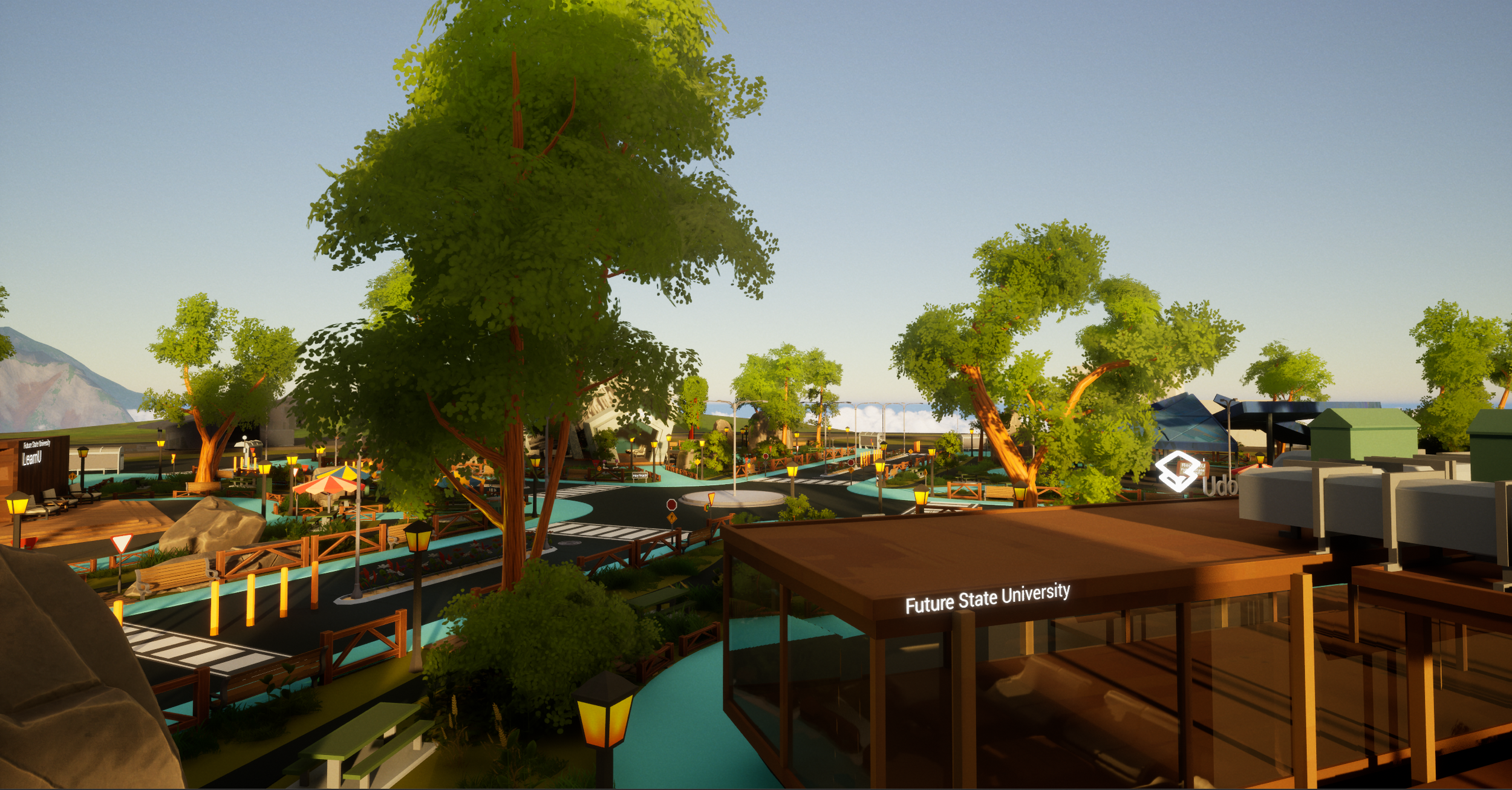

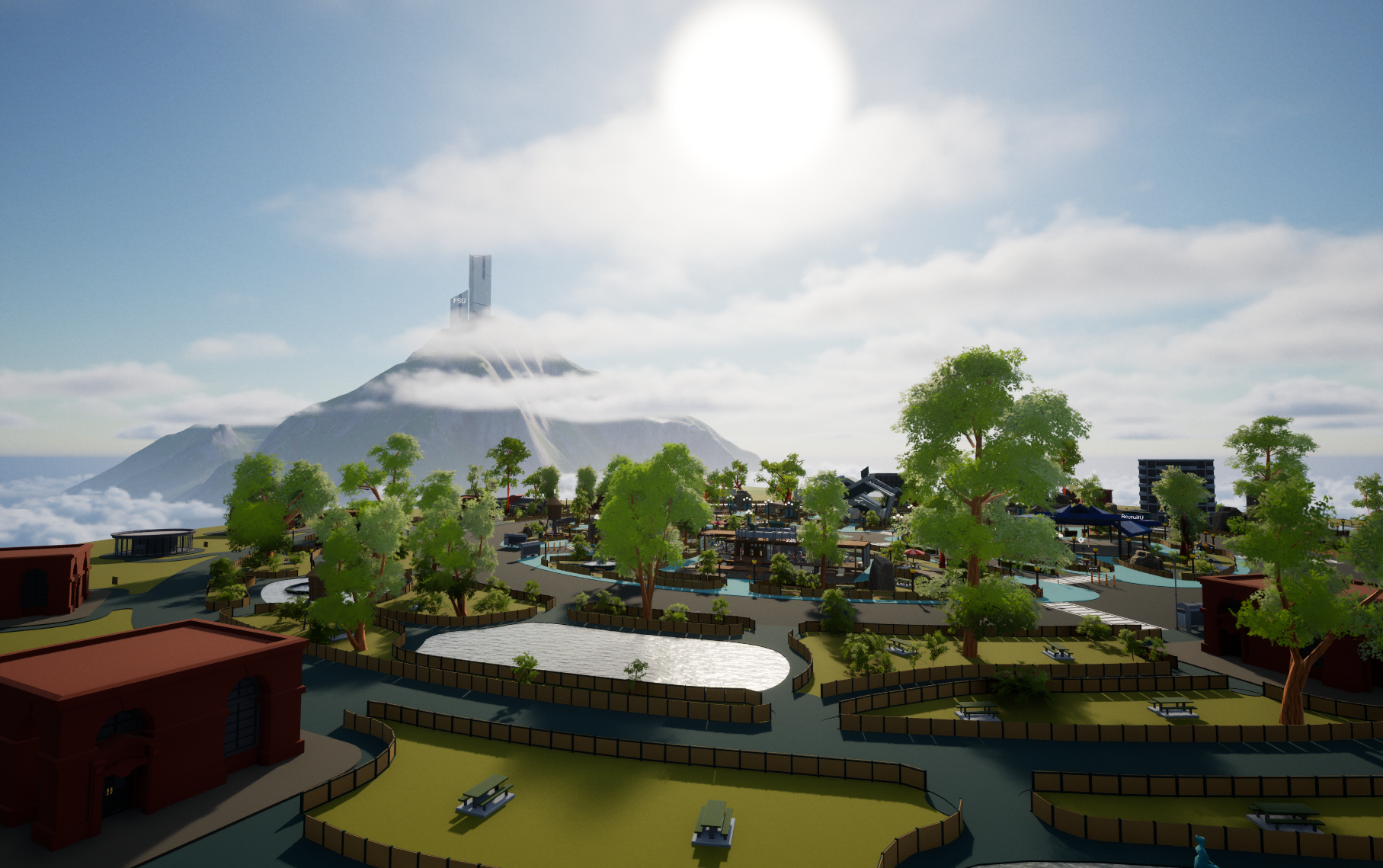

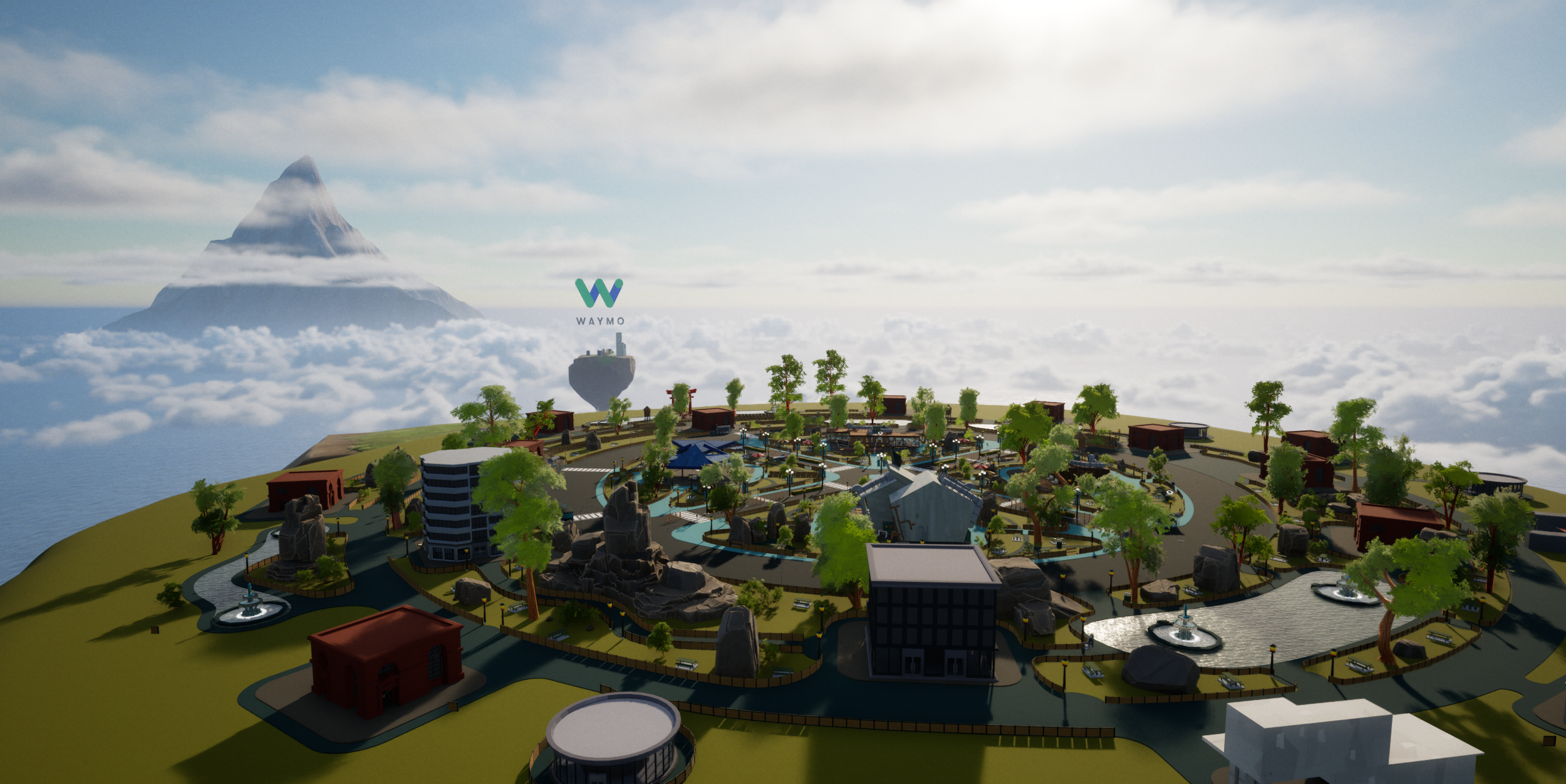

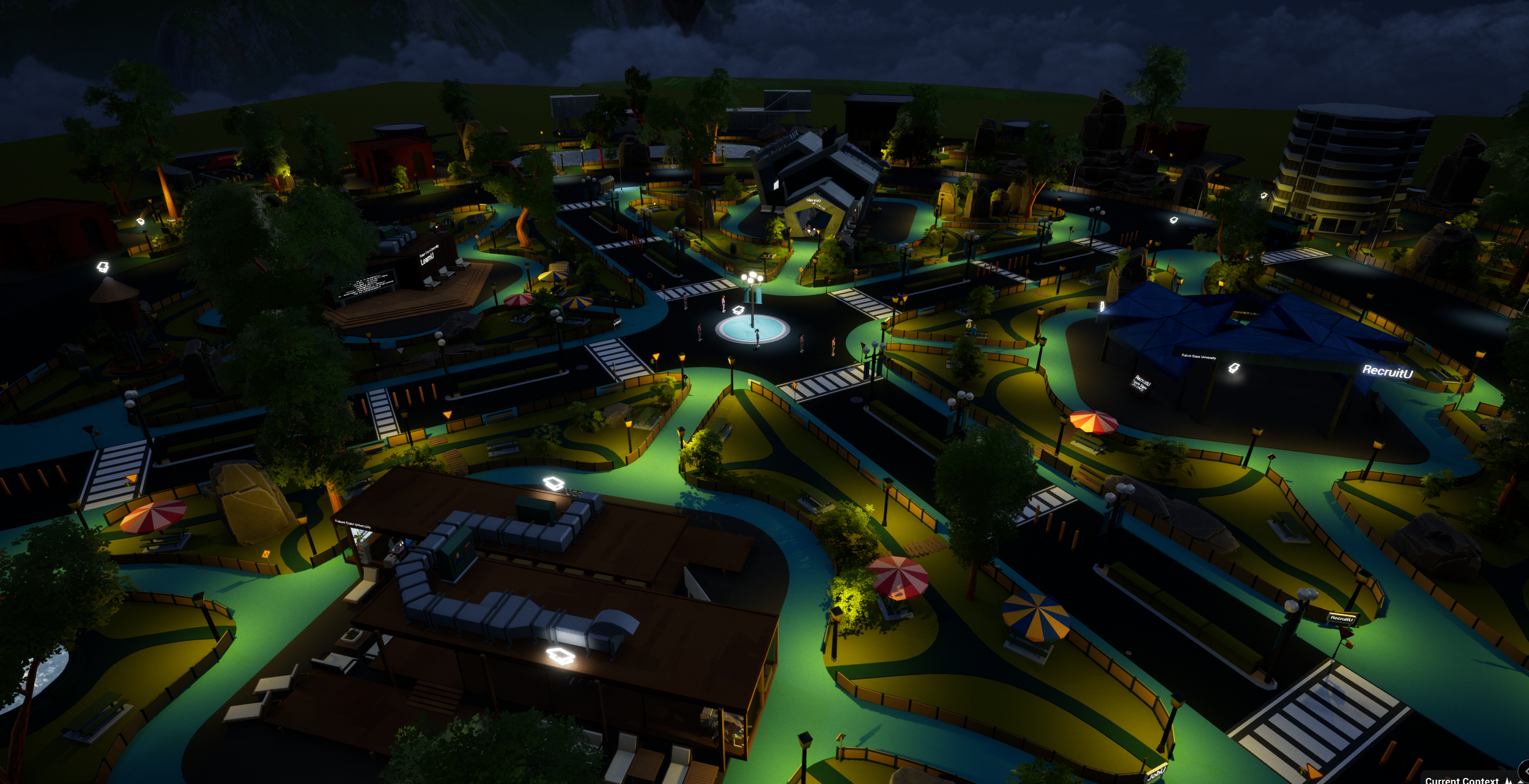

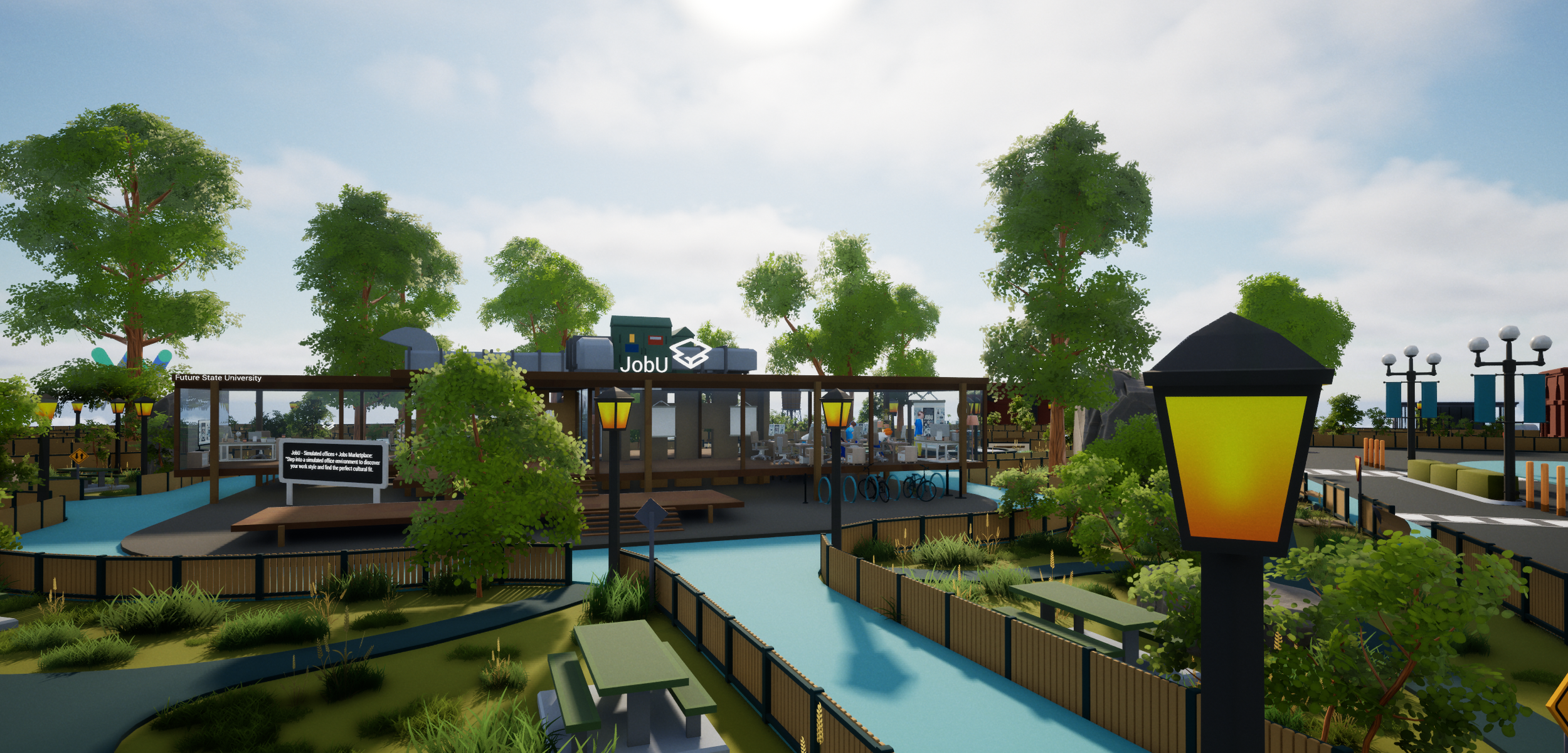

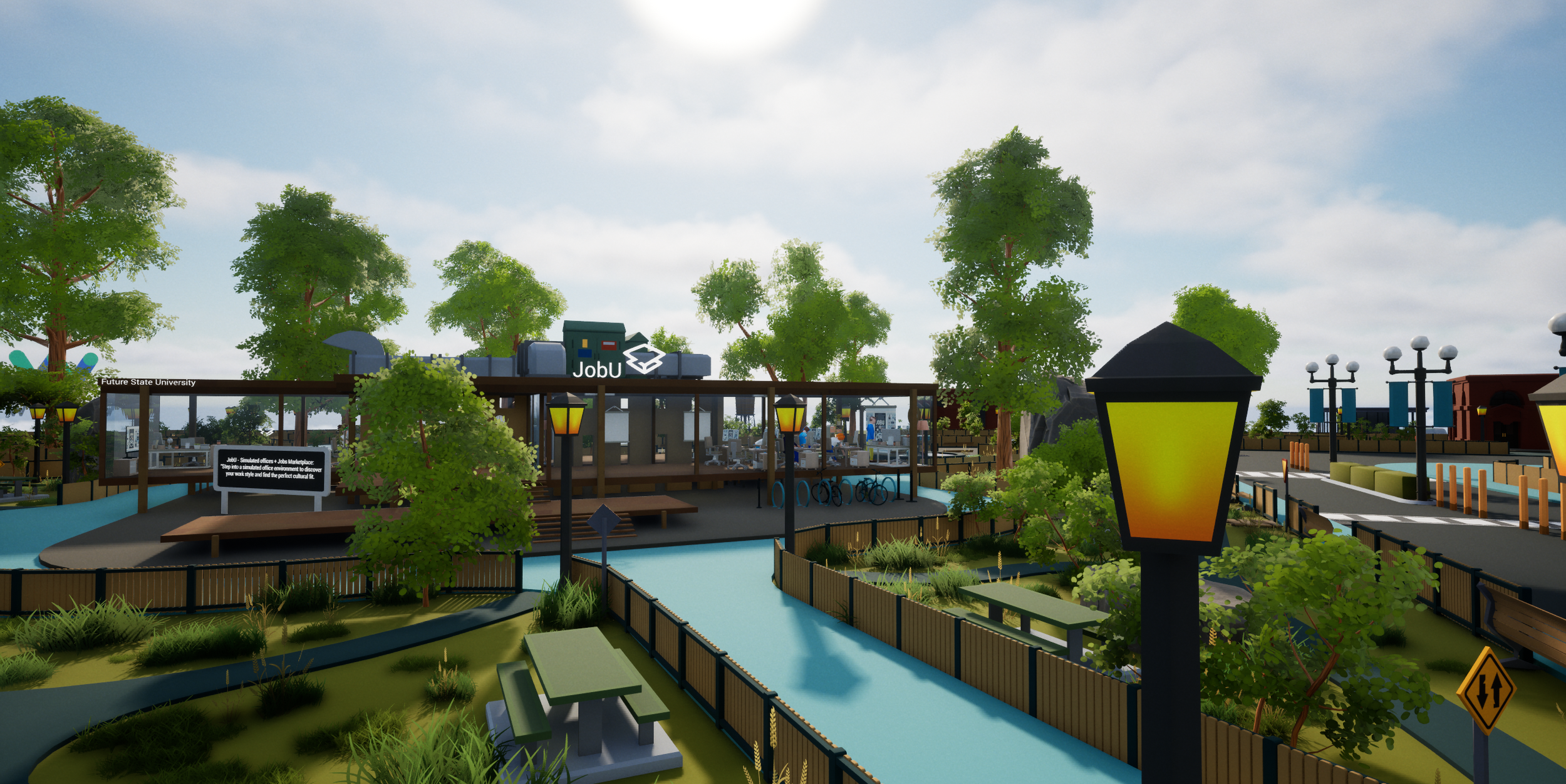

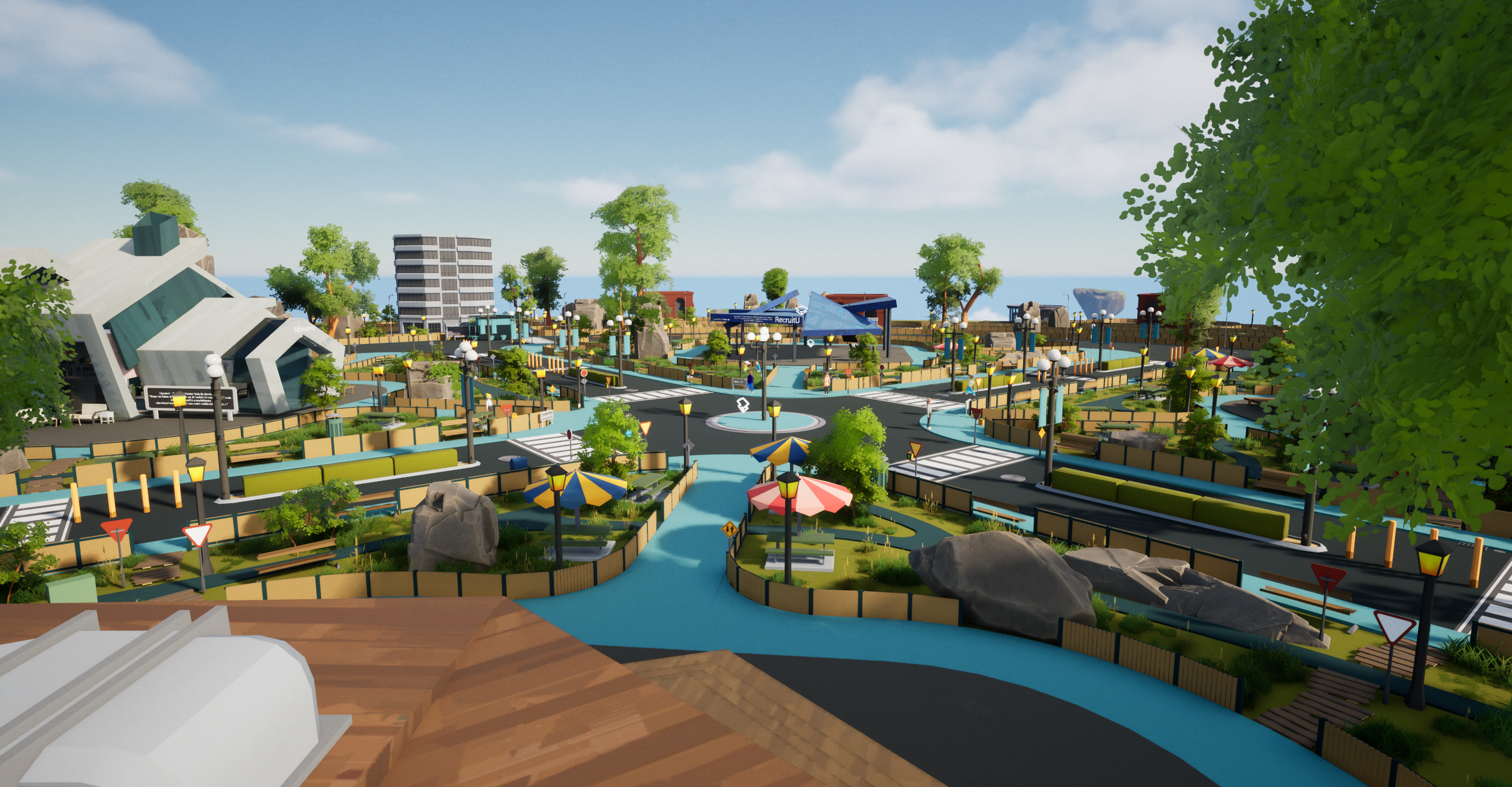

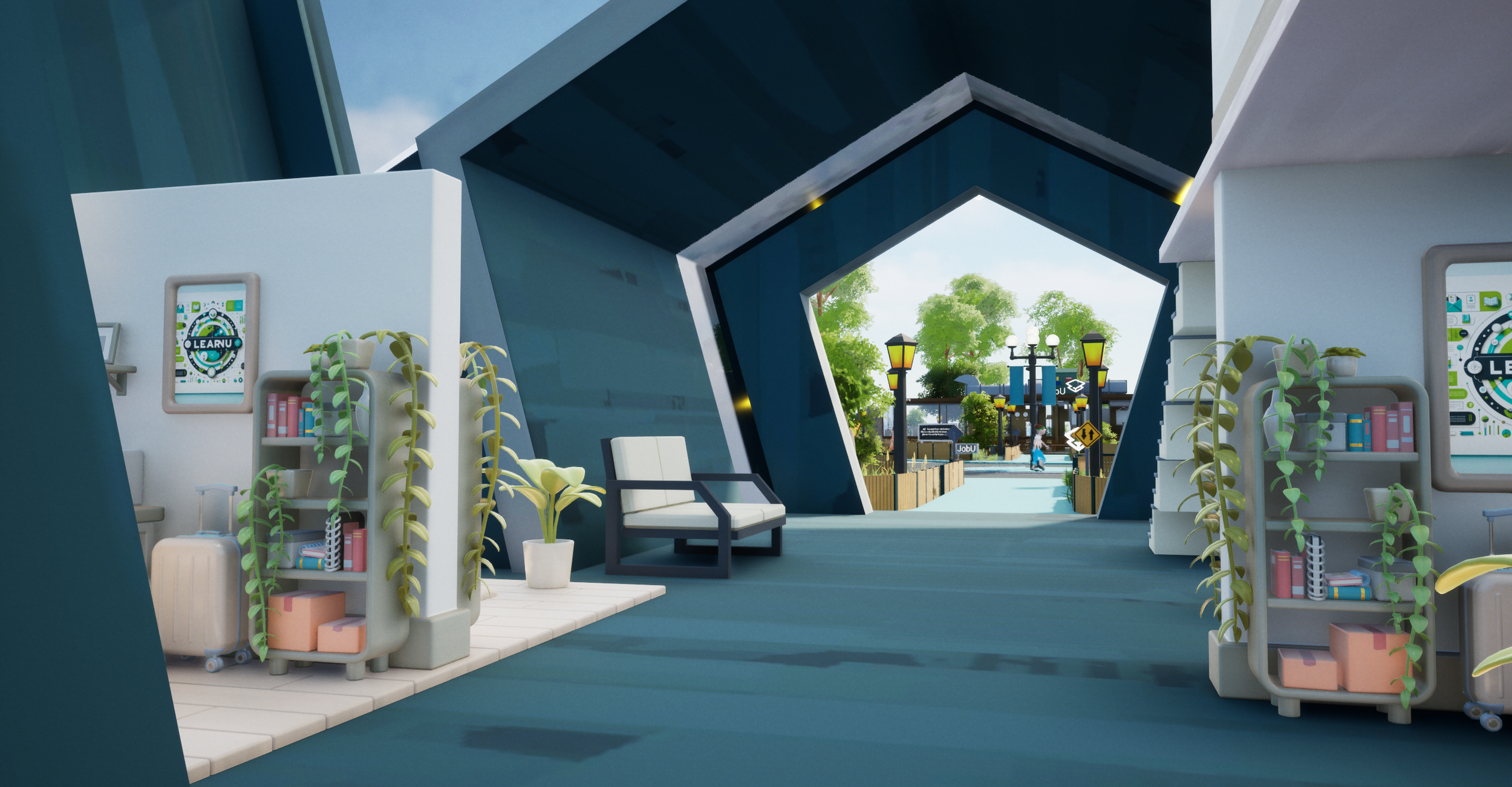

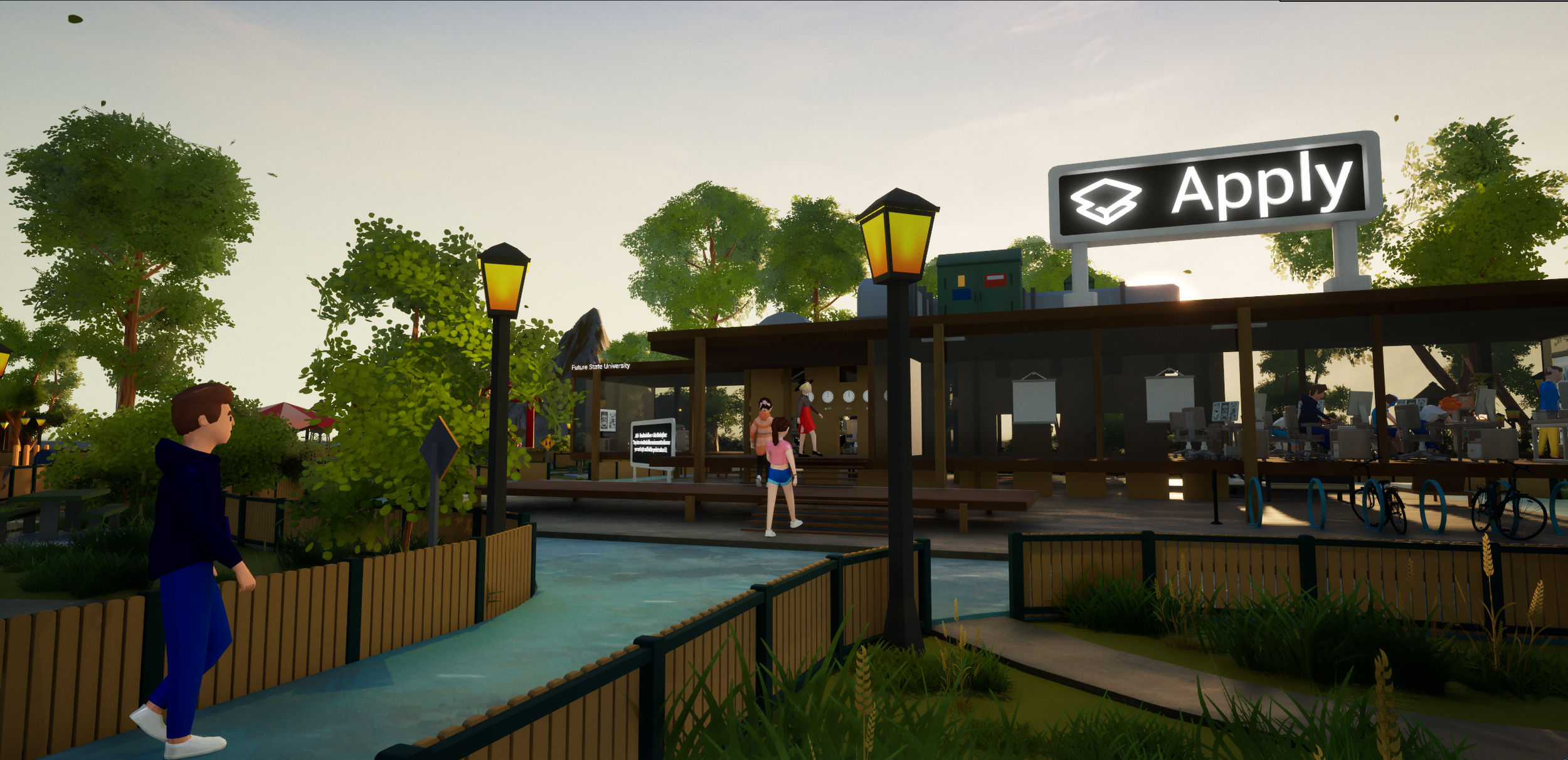

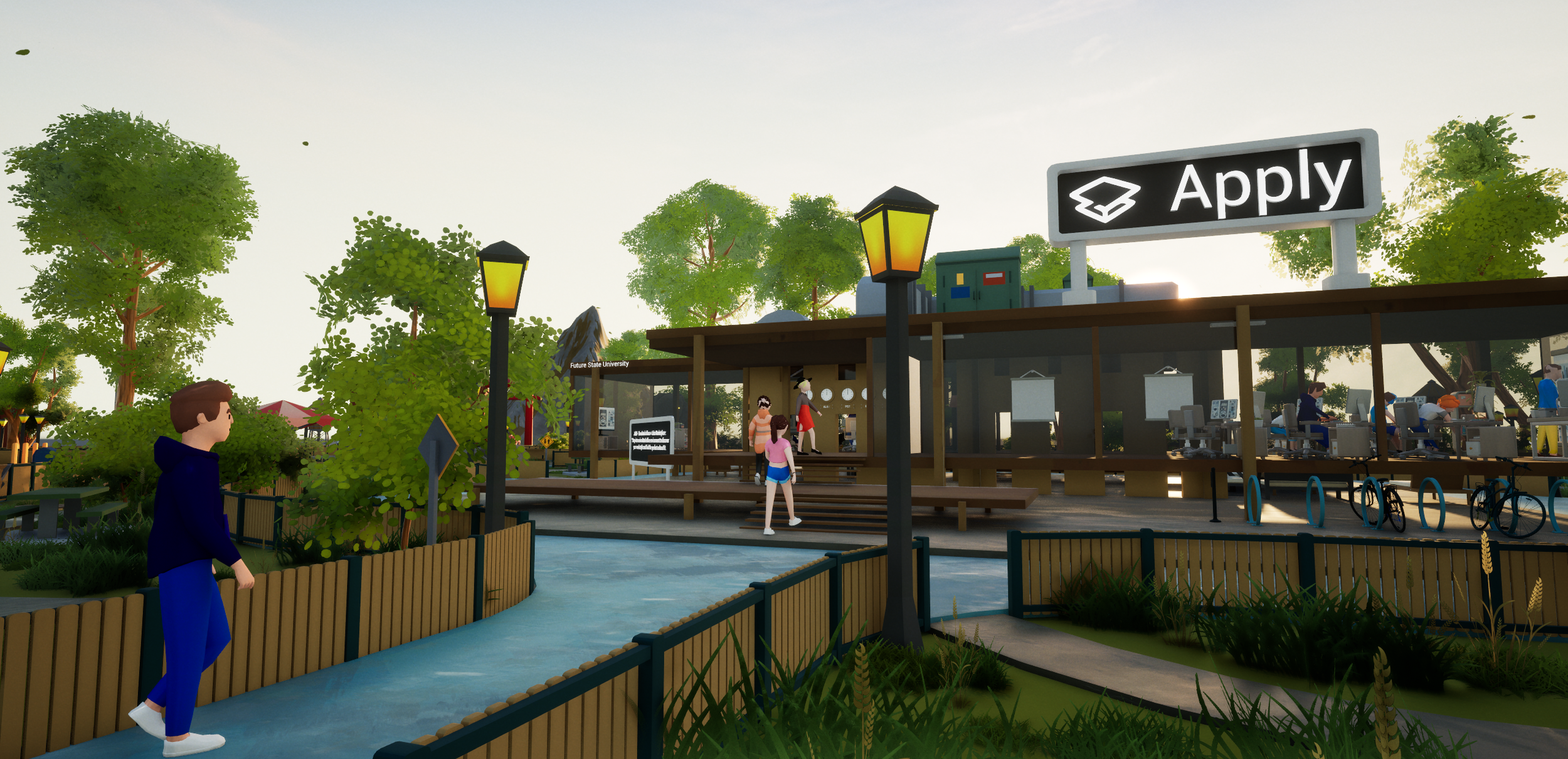

Project: FSU Metaverse MVP & Career Center

Role: Senior Technical Artist & Lead Developer

Tools: Unreal Engine 5, Houdini, Maya, Python, OpenAI API, C++

Timeline: 3.5 Month Rapid Prototype

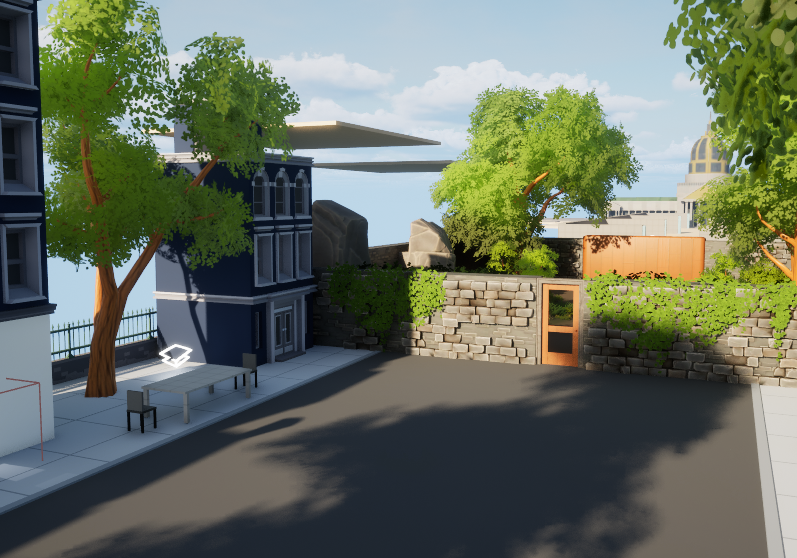

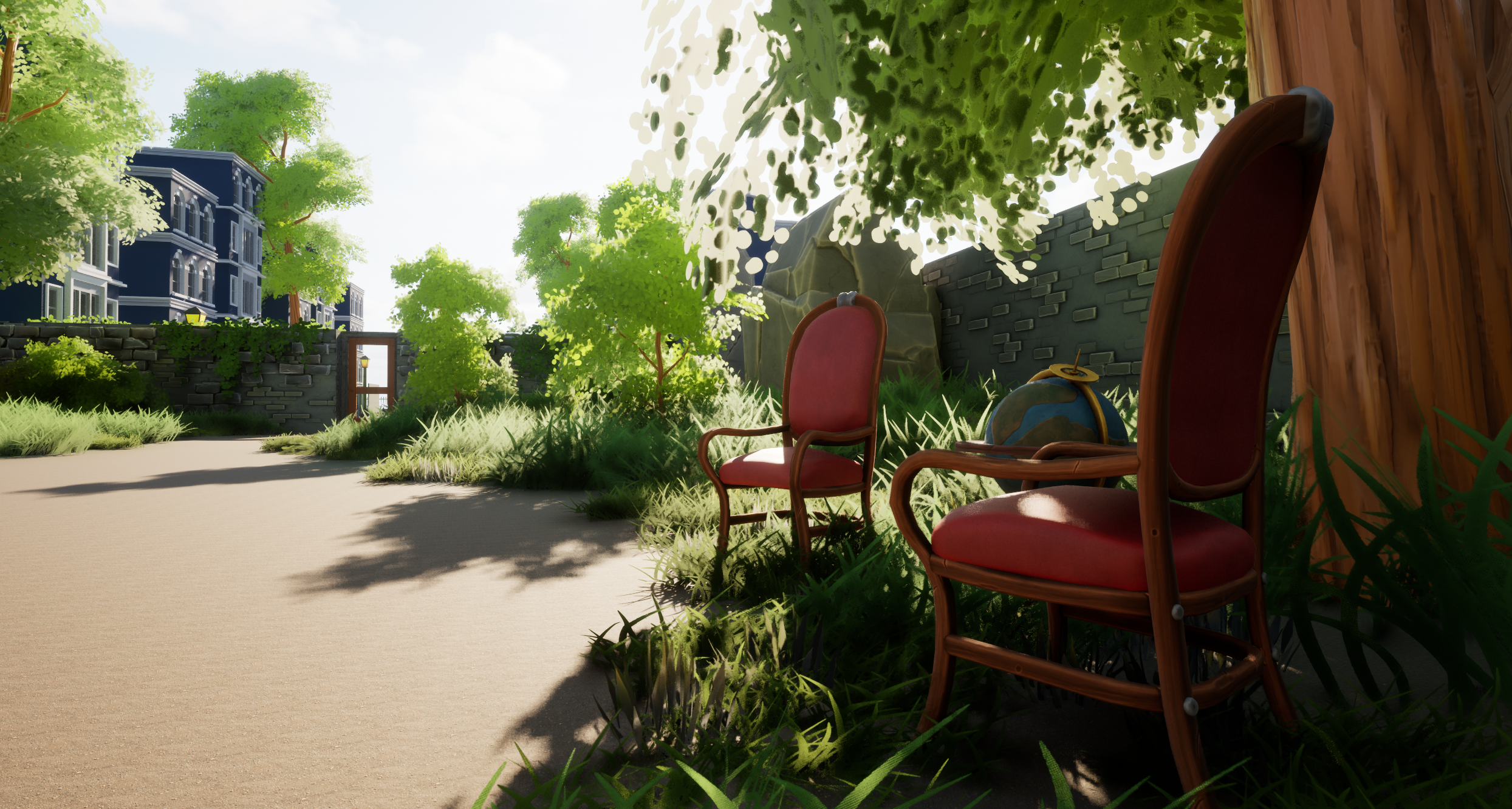

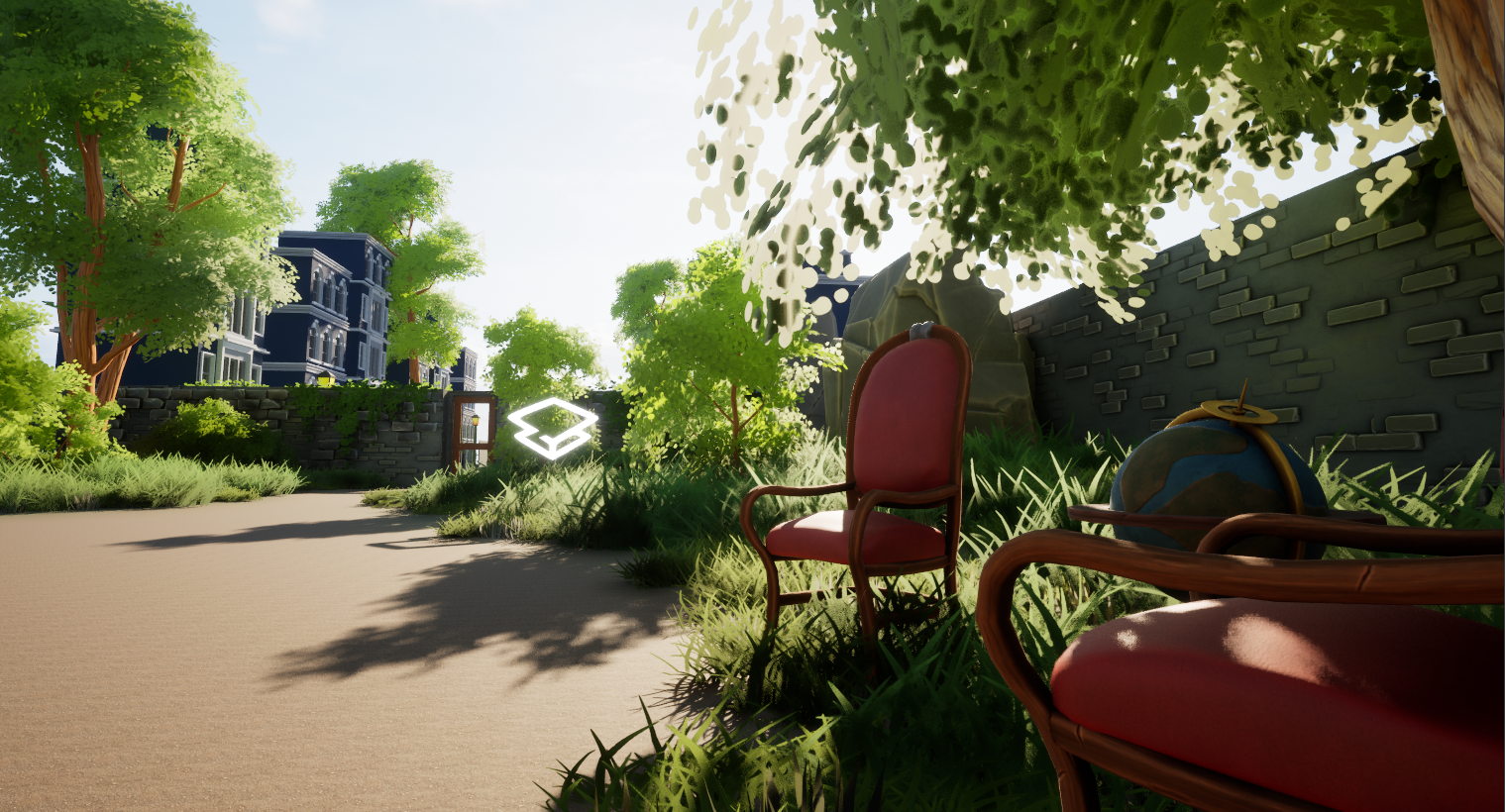

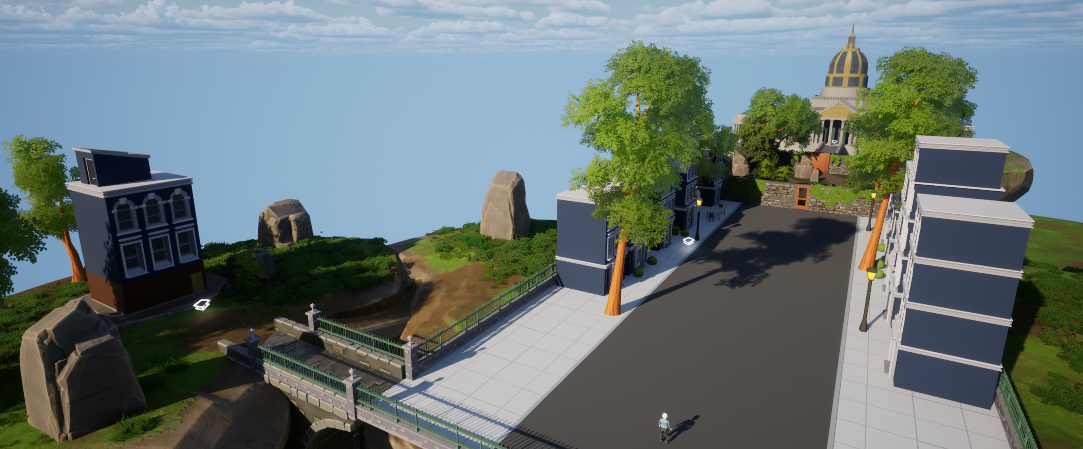

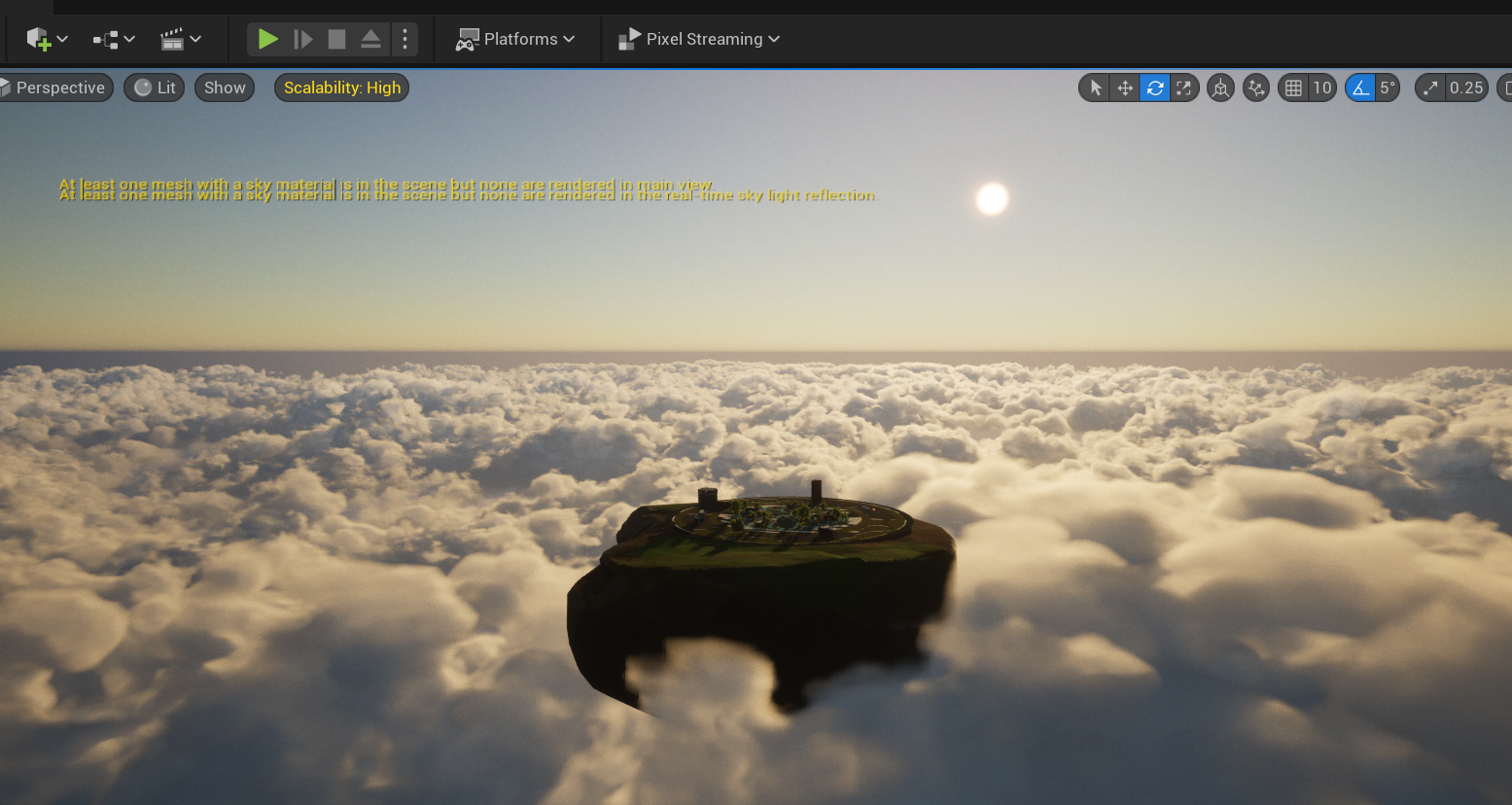

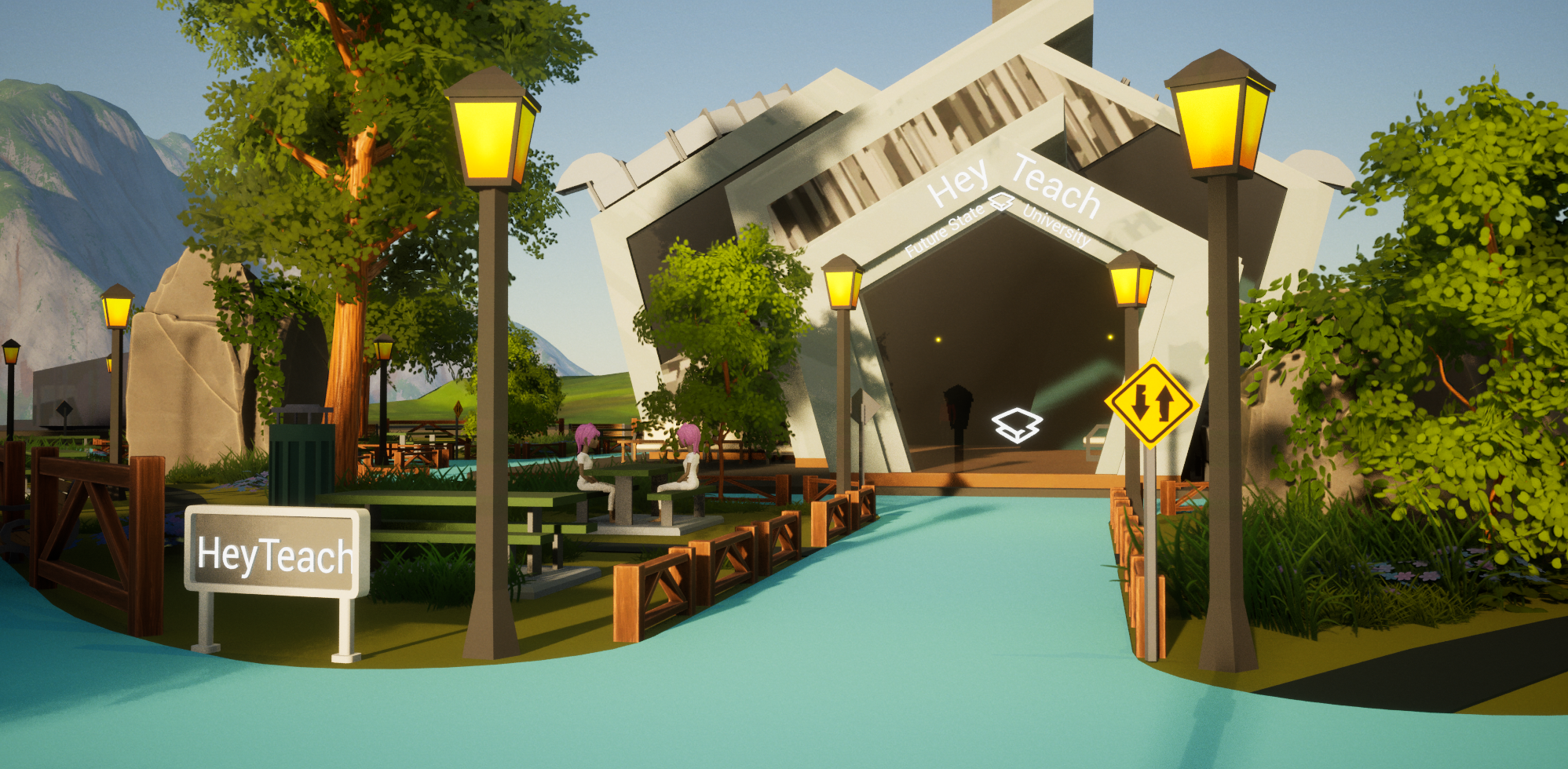

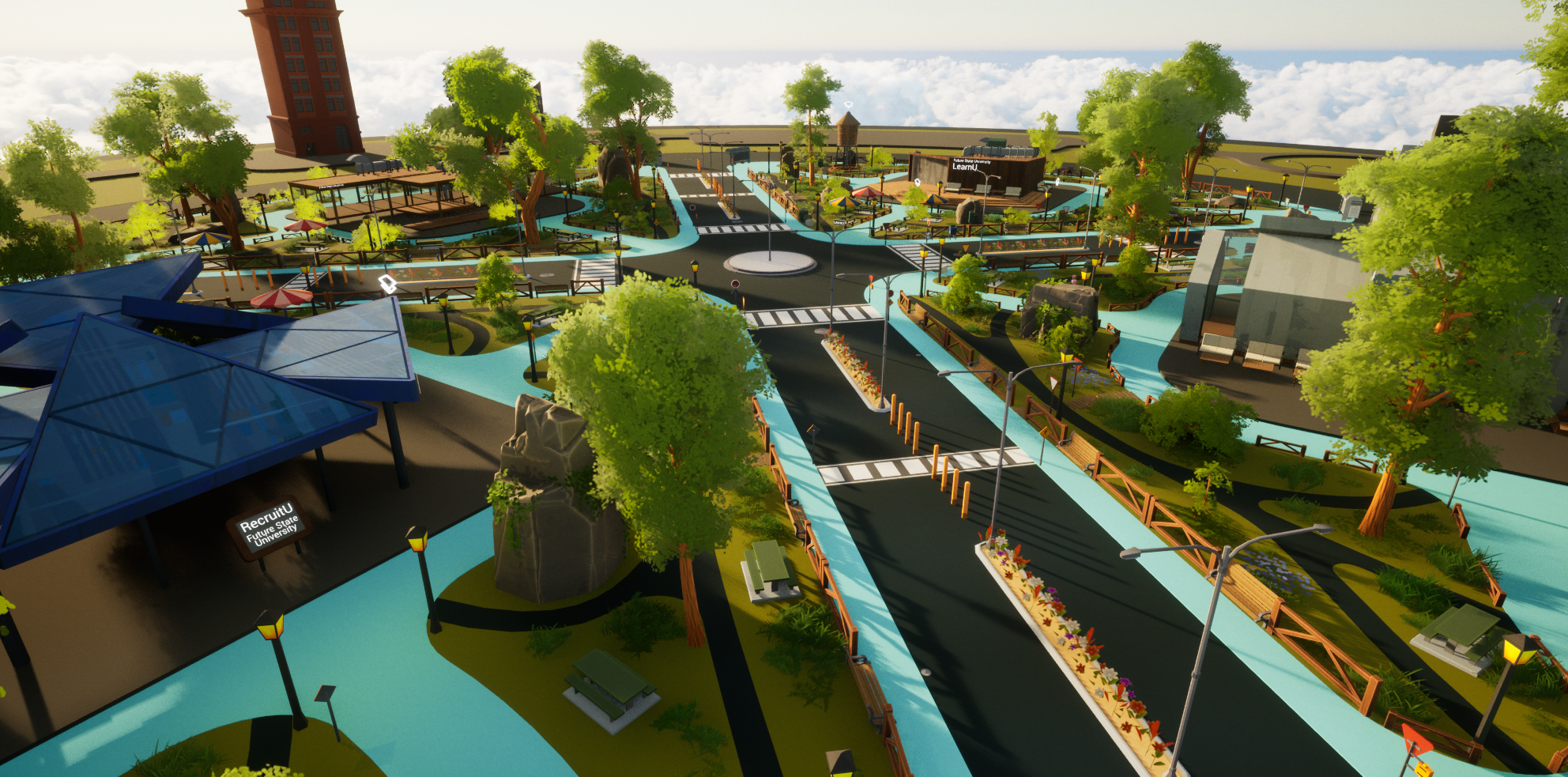

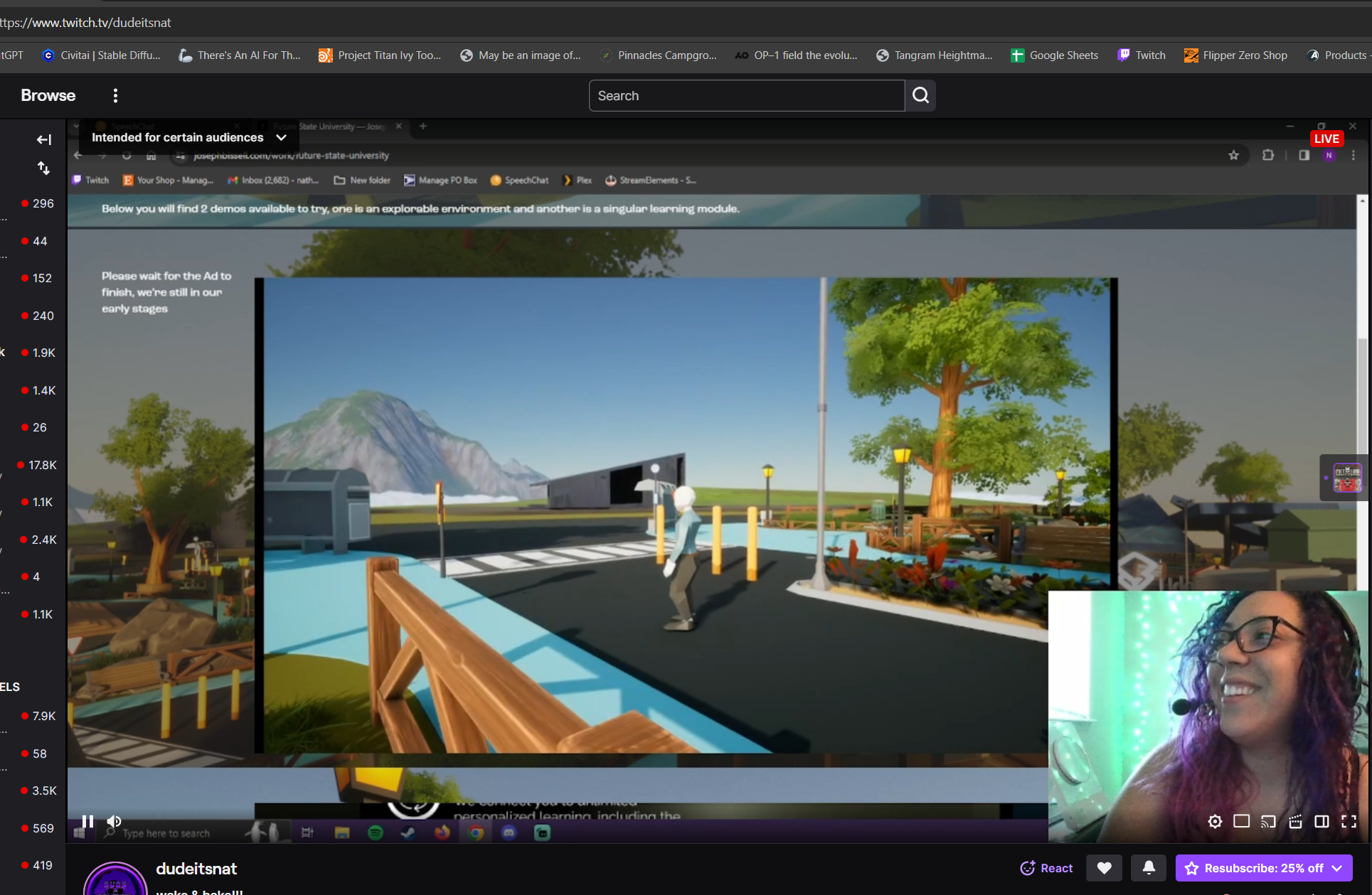

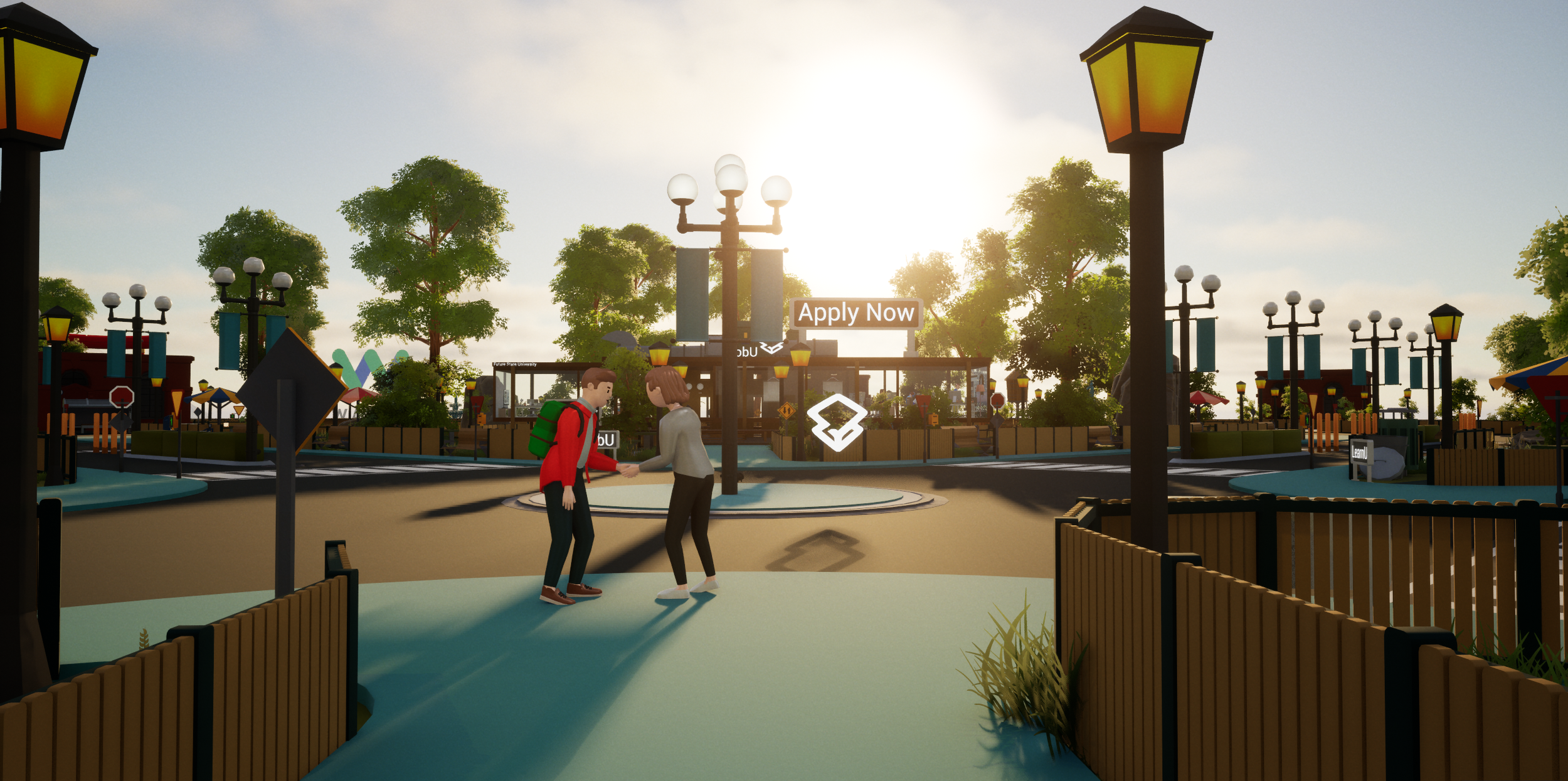

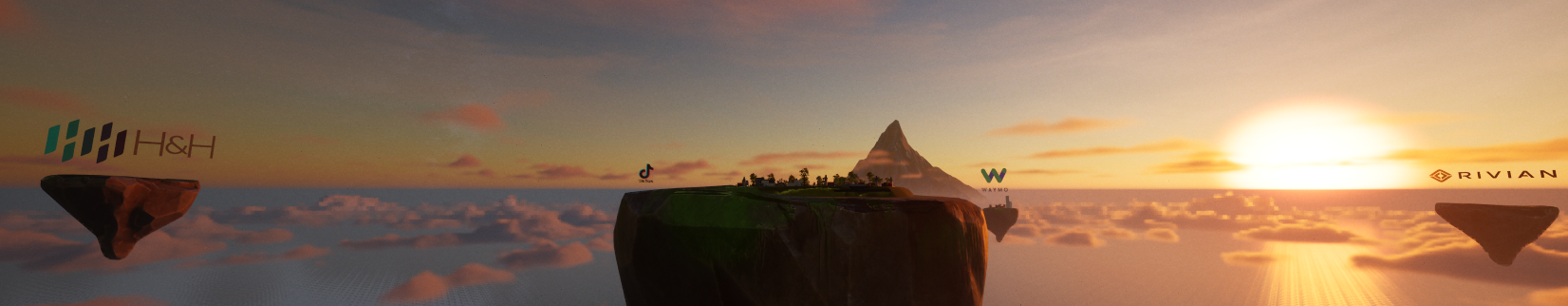

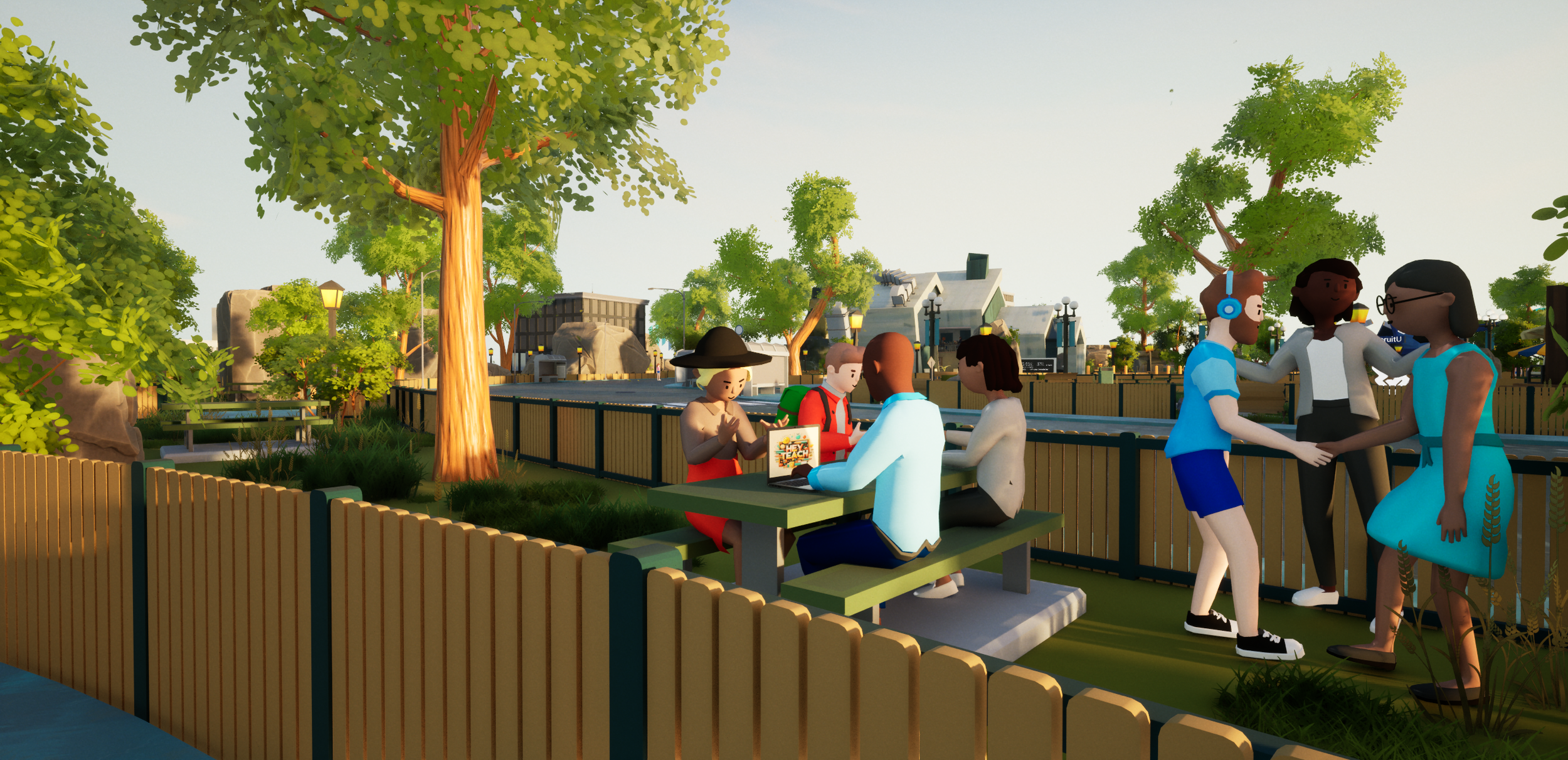

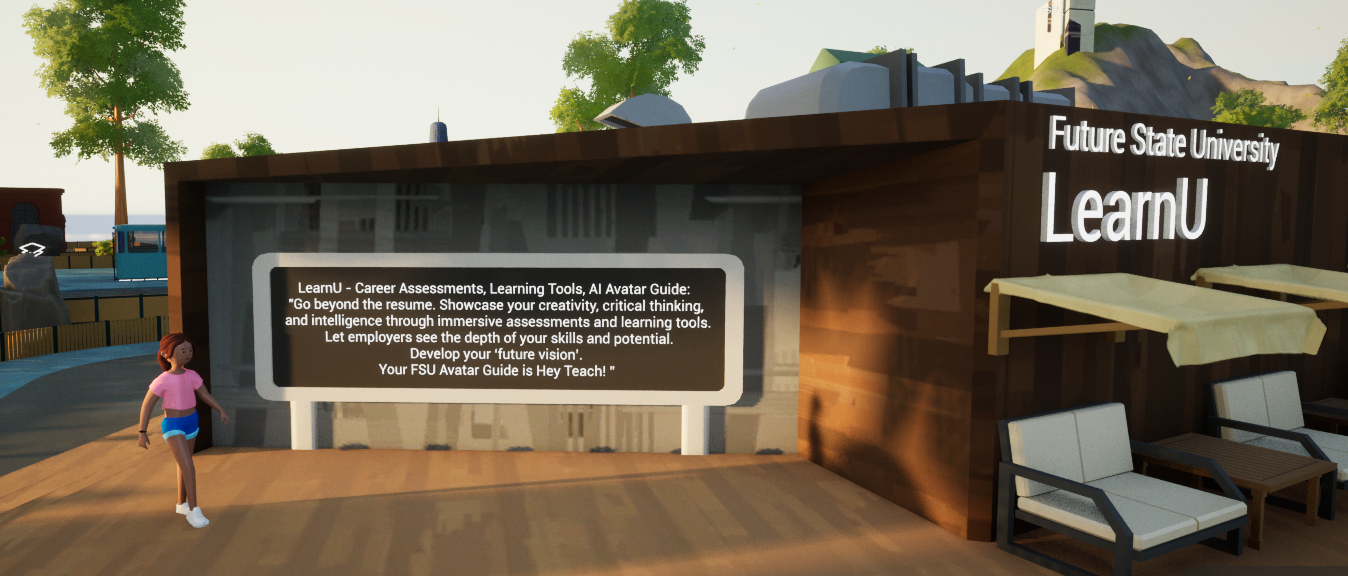

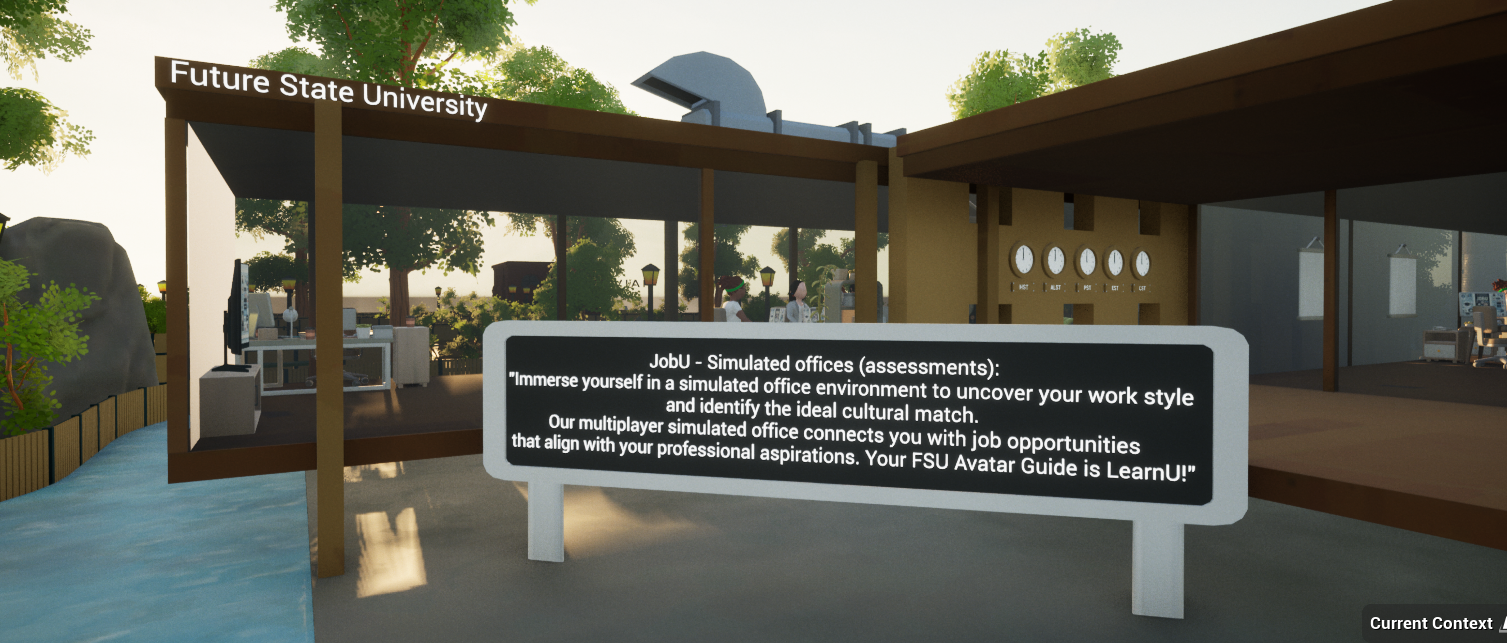

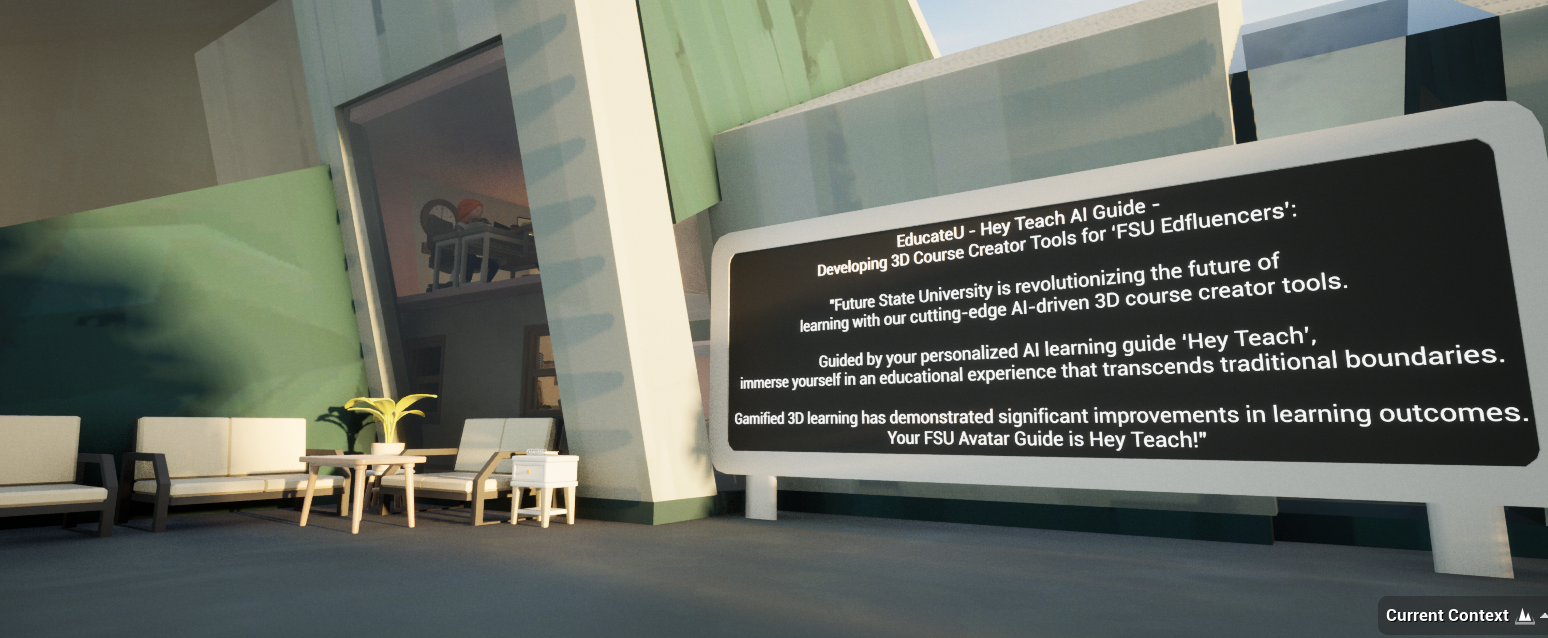

Project Overview: Designed to gamify the career development process for Future State University students, this Metaverse MVP served as an interactive hub for career readiness. As the technical lead, I was responsible for architecting the world-building pipeline, implementing AI-driven gameplay systems, and delivering cinematic content under an extremely aggressive timeline.

Key Technical Achievements

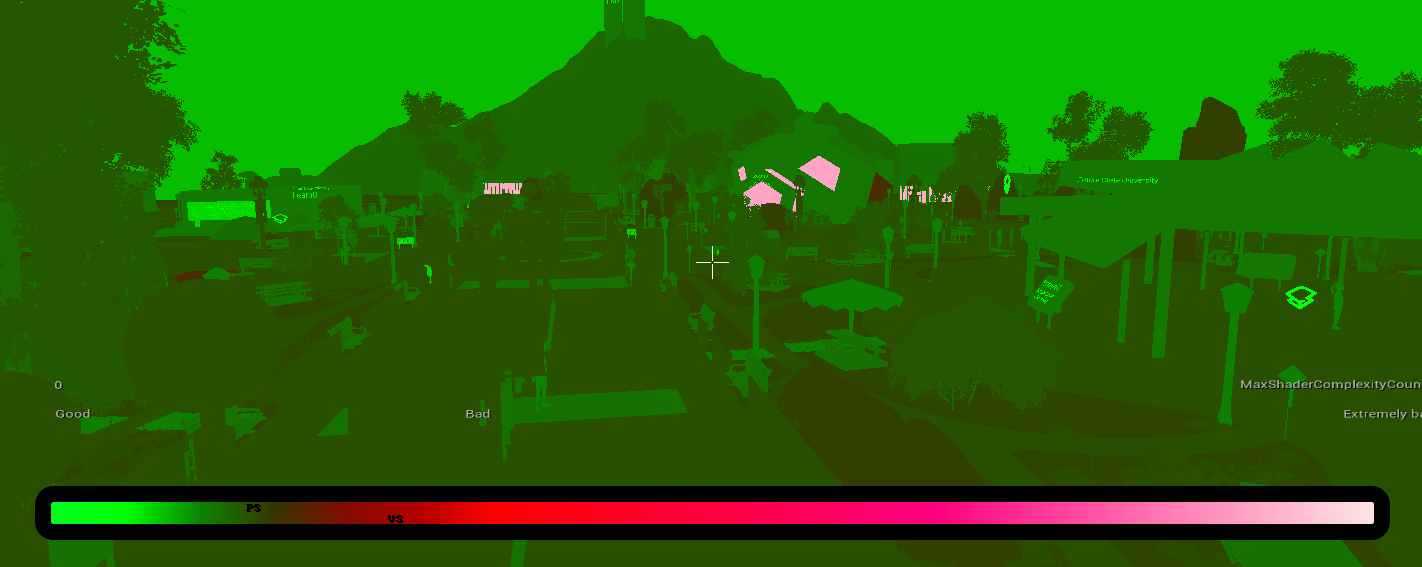

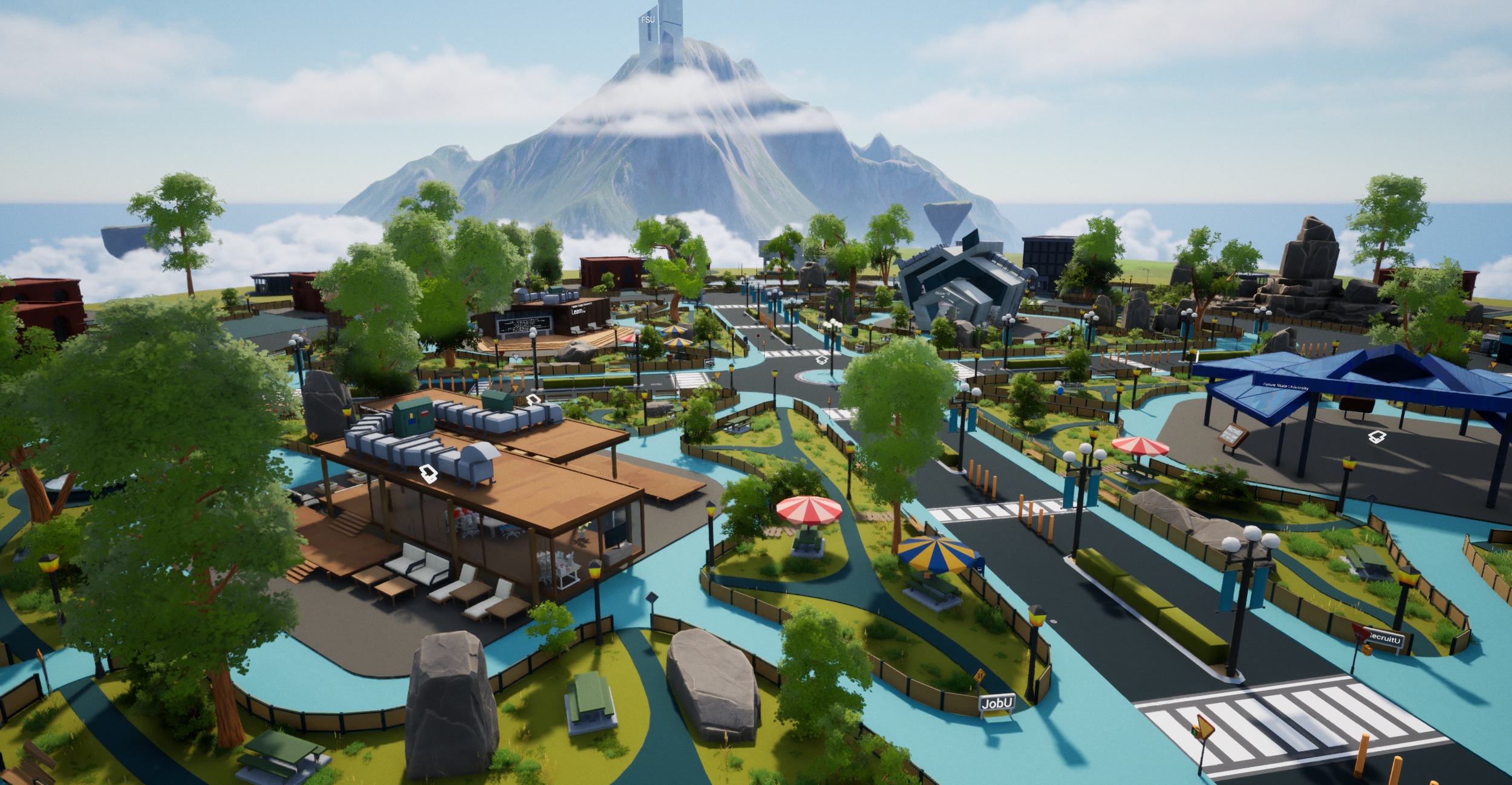

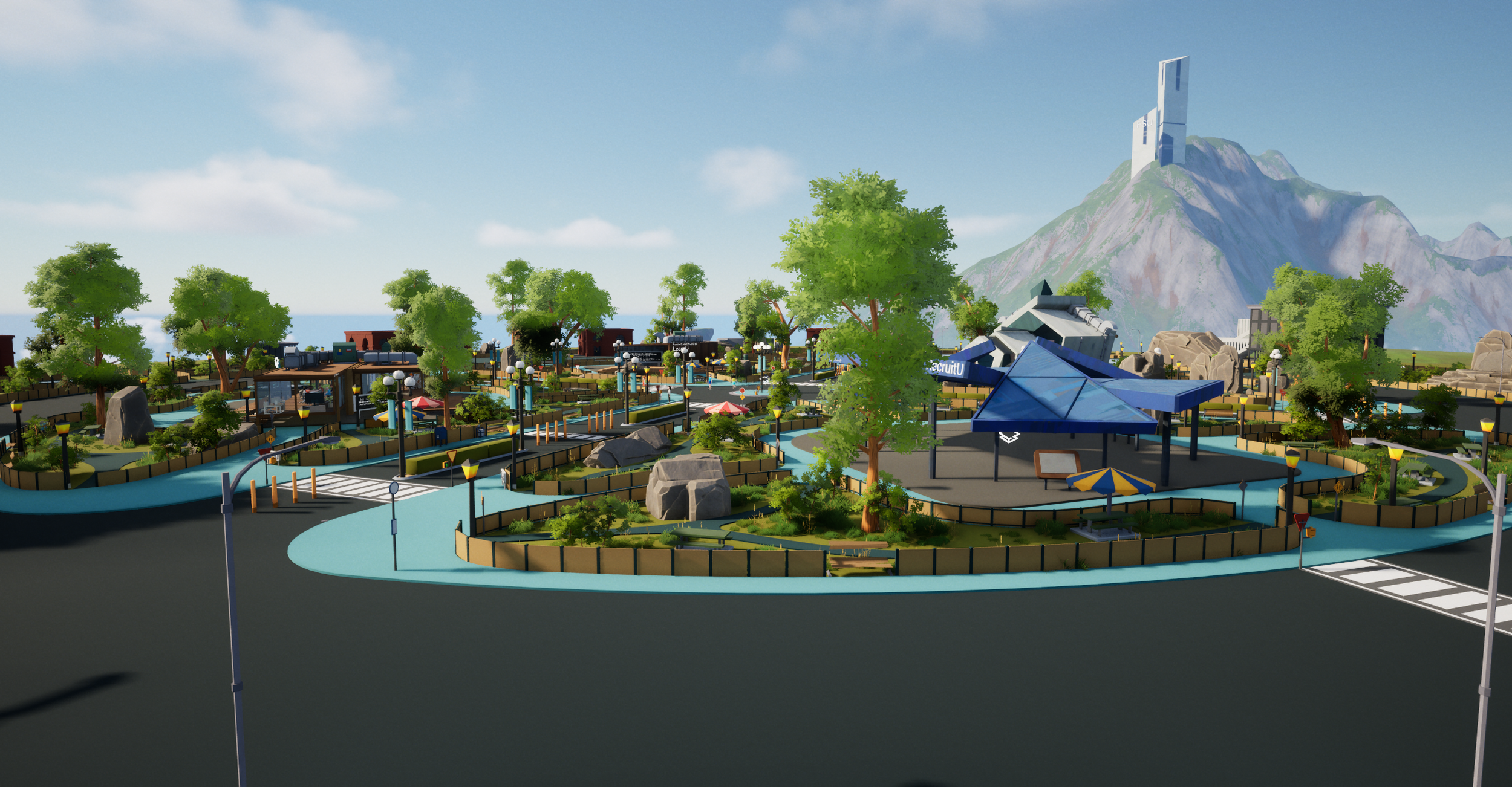

Procedural World Building (Houdini):

Abandoned manual placement in favor of custom procedural tools built in Houdini.

Integrated real-world civil engineering drafting standards directly into the generation logic, ensuring that the campus layout was not only visually cohesive but architecturally grounded.

This pipeline allowed for rapid iteration of the environment layout without sacrificing detail.

AI-Powered Career Simulation:

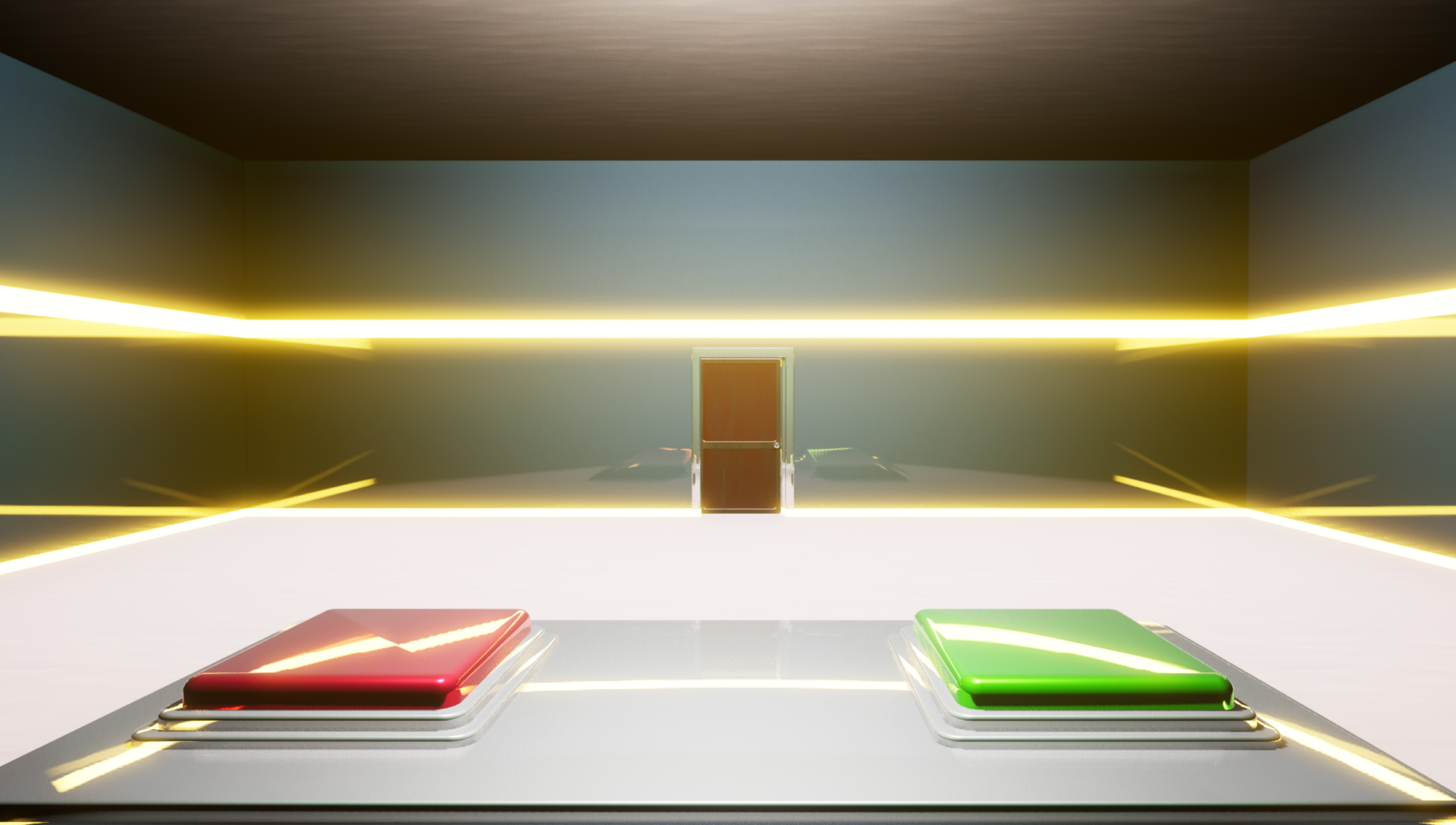

Mock Interview Module: Developed a system where users upload their actual resumes and participate in a voice-based interview with an AI NPC. The system analyzes the audio to score the user's performance against specific job descriptions.

Anti-Cheat Implementation: Engineered logic to detect "AI-scraping" vs. genuine human responses, prioritizing audio analysis to verify the authenticity of the user's soft skills.
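As a rough illustration of scoring a response against a job description, the sketch below measures how many of the job description's keywords appear in an interview transcript. It is a stand-in, not the project's method: it assumes the audio has already been transcribed to text, and the stopword list, the function names `keywords` and `coverage_score`, and the keyword-coverage metric are all hypothetical simplifications.

```python
import re

# Hypothetical, deliberately tiny stopword list for illustration only.
STOPWORDS = {"with", "that", "this", "have", "from", "will", "your"}


def keywords(text):
    """Return the set of lowercase words longer than 3 letters, minus stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if len(w) > 3 and w not in STOPWORDS}


def coverage_score(transcript, job_description):
    """Fraction of job-description keywords that also occur in the transcript."""
    wanted = keywords(job_description)
    if not wanted:
        return 0.0
    return len(wanted & keywords(transcript)) / len(wanted)
```

A score of 1.0 would mean every keyword in the job description was mentioned at least once. A production system would need far more than keyword overlap to judge soft skills.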

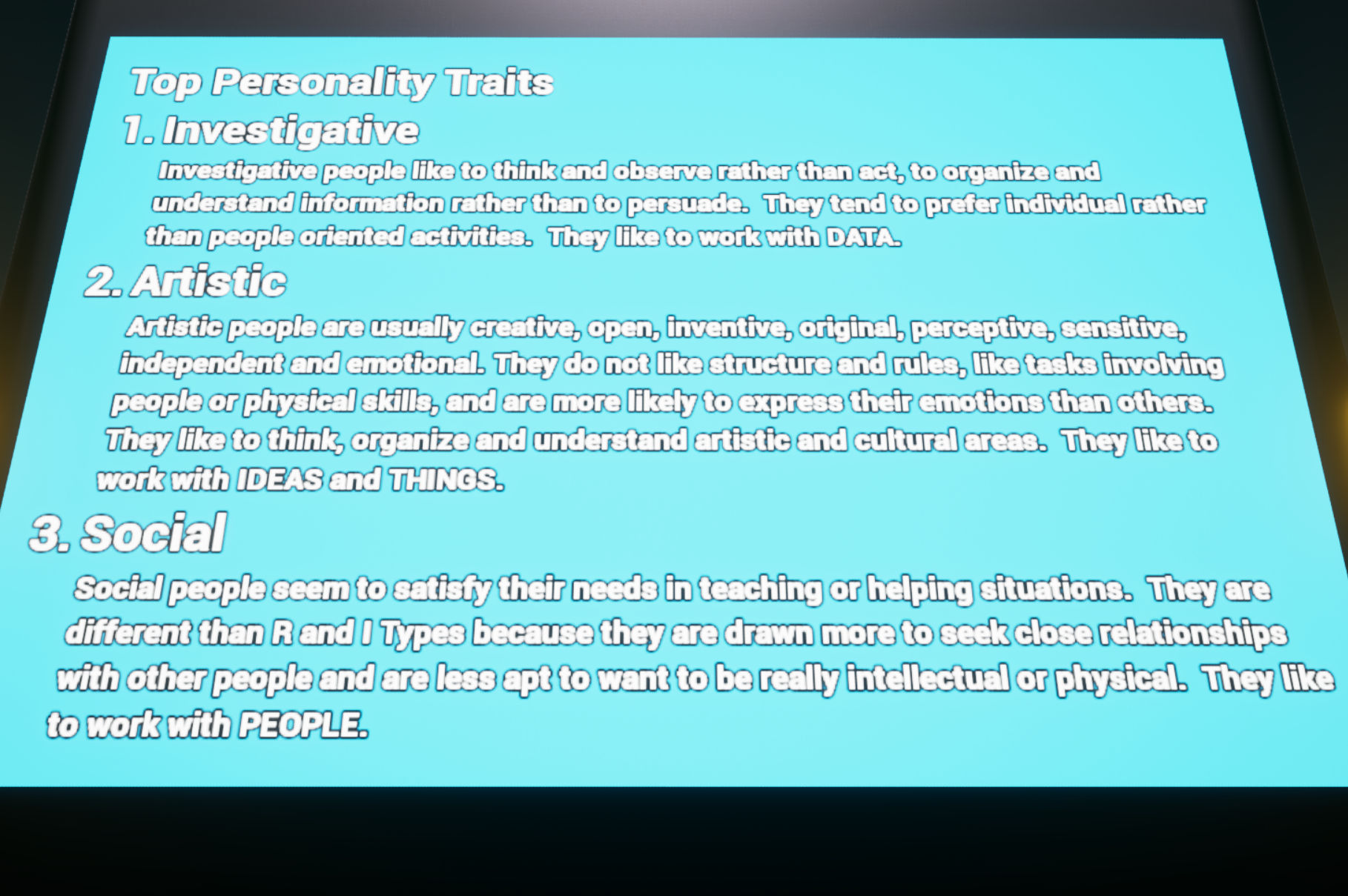

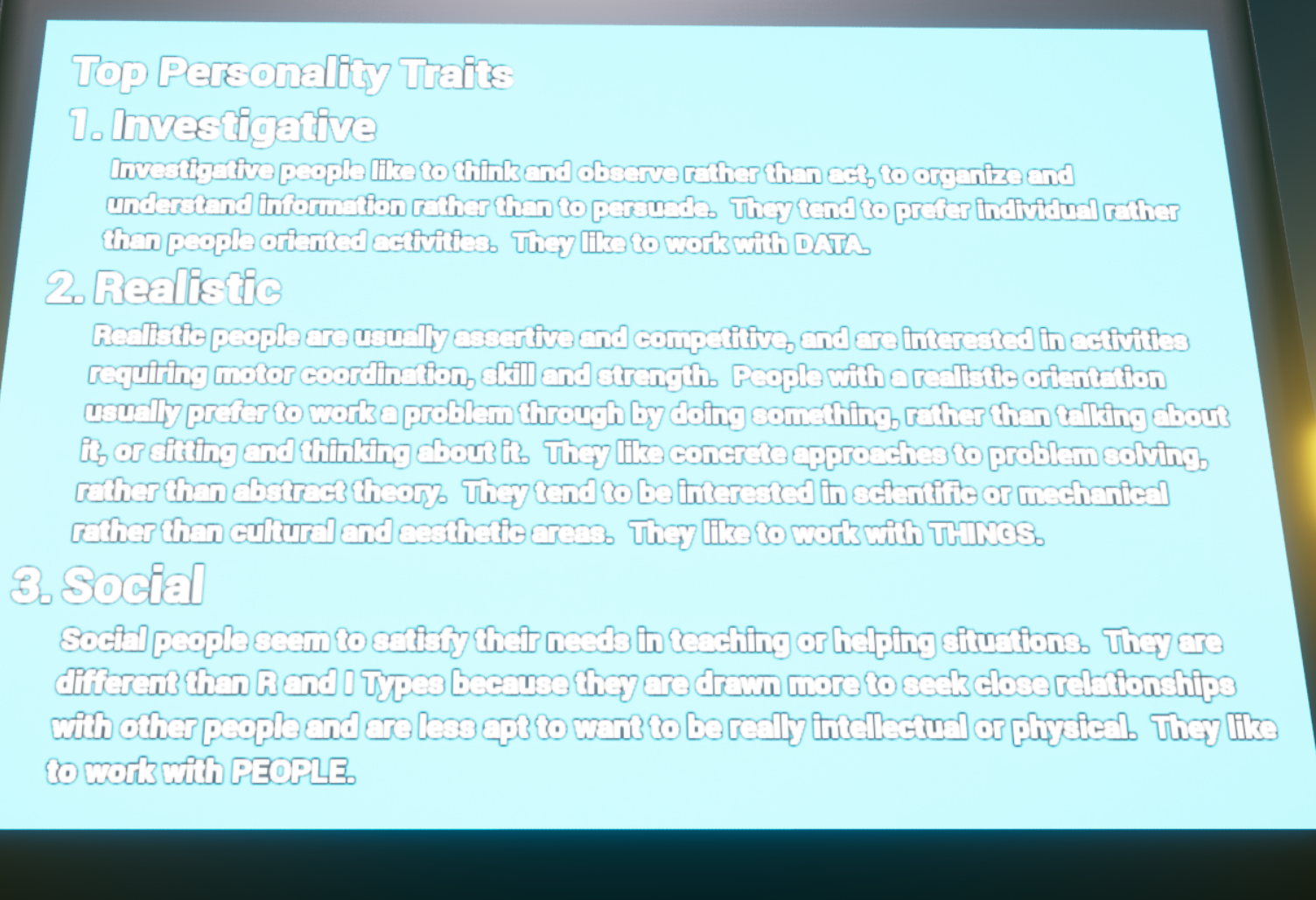

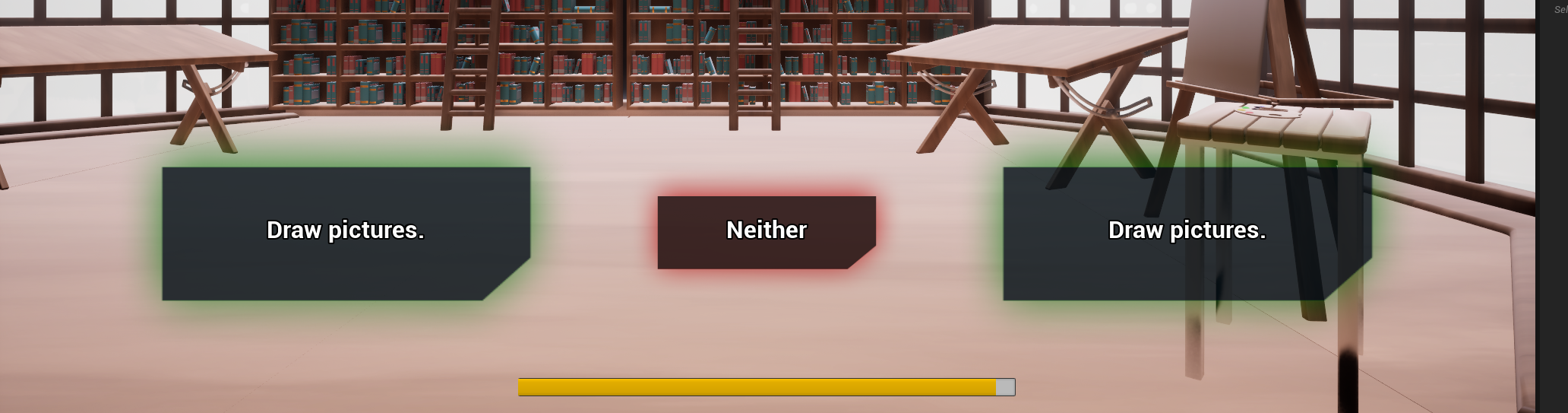

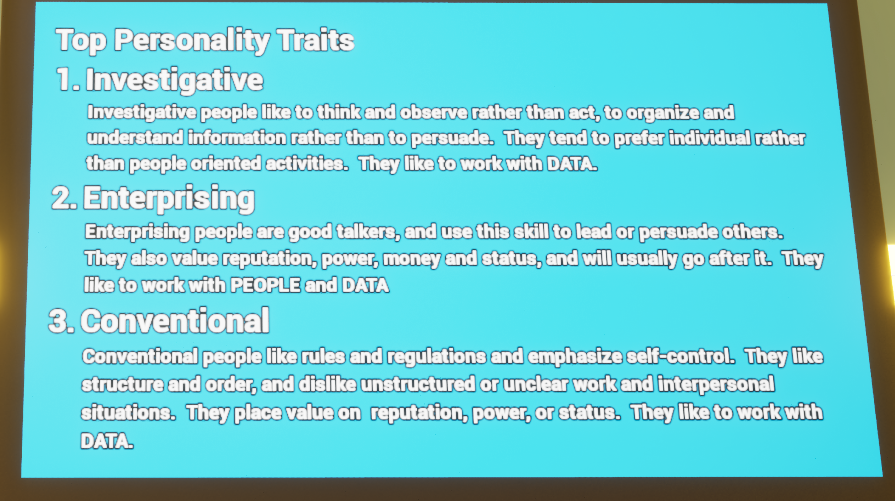

Holland Code Assessment: Built a visual-first multiple-choice test to profile student career interests, mapping results to potential job paths within the world.
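A minimal sketch of how a Holland Code (RIASEC) result could be tallied from multiple-choice answers and mapped to job paths. It assumes each answer choice is tagged with exactly one of the six RIASEC letters. The tie-breaking rule, the `EXAMPLE_PATHS` table, and the function names are hypothetical and are not taken from the project.

```python
from collections import Counter

# Realistic, Investigative, Artistic, Social, Enterprising, Conventional
RIASEC_ORDER = "RIASEC"

# Hypothetical example paths, one per interest type, for illustration only.
EXAMPLE_PATHS = {
    "R": "Engineering technician",
    "I": "Research analyst",
    "A": "Graphic designer",
    "S": "Counselor",
    "E": "Sales manager",
    "C": "Accountant",
}


def holland_code(answers, length=3):
    """Return the top `length` RIASEC letters, most frequent first.

    Ties are broken by position in RIASEC_ORDER (an arbitrary assumption).
    """
    for letter in answers:
        if letter not in RIASEC_ORDER:
            raise ValueError(f"unknown interest type: {letter!r}")
    counts = Counter(answers)
    ranked = sorted(RIASEC_ORDER, key=lambda t: (-counts[t], RIASEC_ORDER.index(t)))
    return "".join(ranked[:length])


def suggest_paths(code):
    """Map each letter of a Holland code to its example job path."""
    return [EXAMPLE_PATHS[letter] for letter in code]
```

For example, `holland_code(["A", "A", "A", "S", "S", "I", "R"])` returns `"ASR"`, which `suggest_paths` maps to the Artistic, Social, and Realistic example paths in that order.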

Motion Capture & Cinematics:

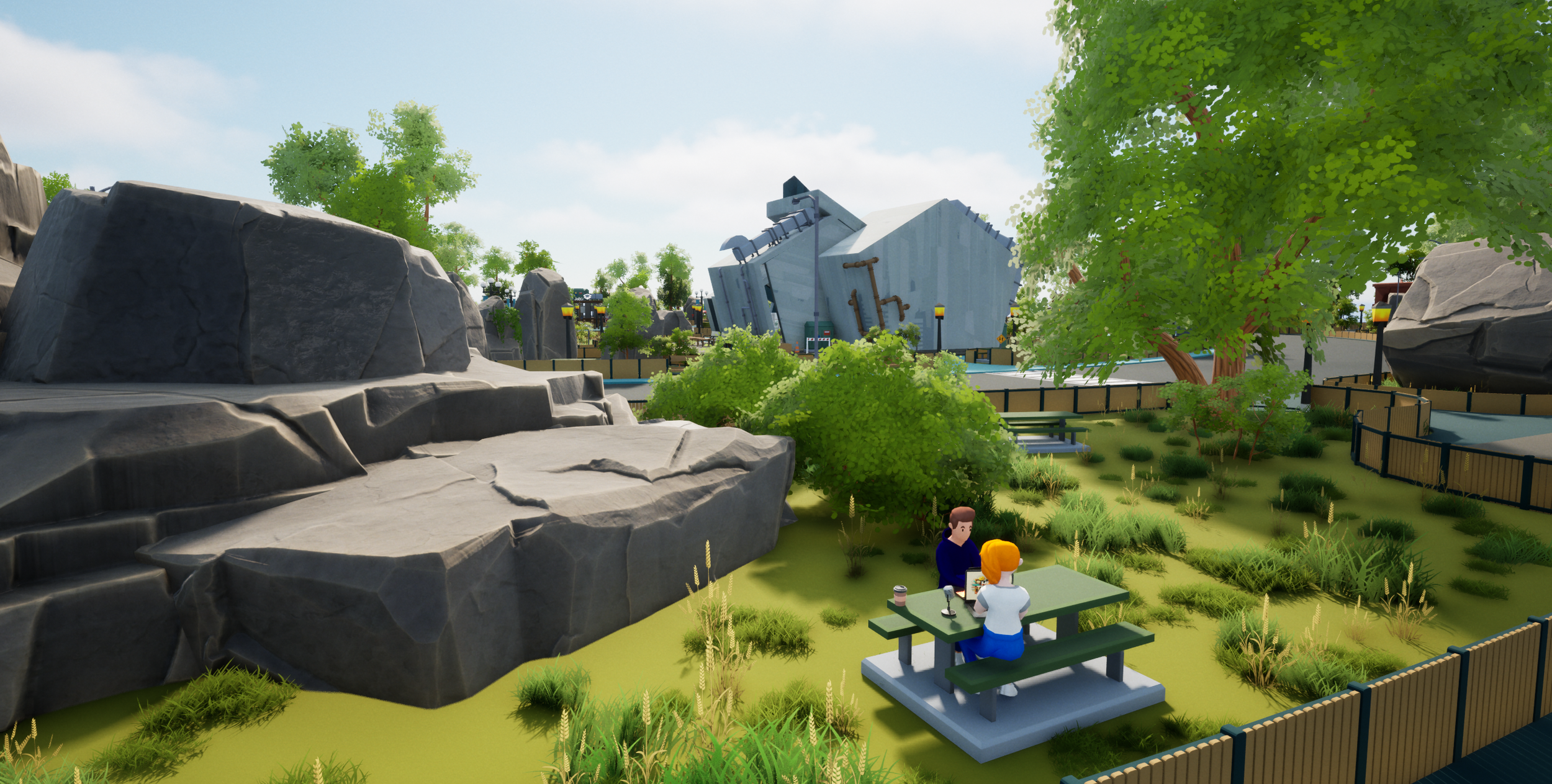

Owned the animation pipeline from performance to engine. I personally choreographed and enacted motion capture sessions to create diverse NPC behaviors and cinematic sequences.

Created the "Drone Tour" intro sequence, animating a custom character interaction where a drone is grabbed out of mid-air and used as a selfie-cam to introduce the user to the campus.

Implemented real-time lip-sync for both text-driven and voice-driven NPC interactions.

The Result: Despite a high-pressure 3.5-month development window, the project successfully demonstrated complex platform capabilities, merging procedural generation, generative AI, and high-fidelity character animation into a cohesive, functional demo.

My work on this project concluded on 04/17/2024. To those who follow in my footsteps, I wish you the best of luck.

Images below are in order of progression